This post presents a summary of the background and class discussion on the Adam (adaptive moment estimation) algorithm. The primary source for this discussion was the original Adam paper. Adam is quite possibly the most popular optimization algorithm being used today in machine learning, particularly in deep learning.

Background (Ryan Kingery)

Adam can be thought of as a generalization of stochastic gradient descent (SGD). It essentially combines three popular additions to SGD into one algorithm: AdaGrad, momentum, and RMSProp.

Adam = AdaGrad + momentum + RMSProp

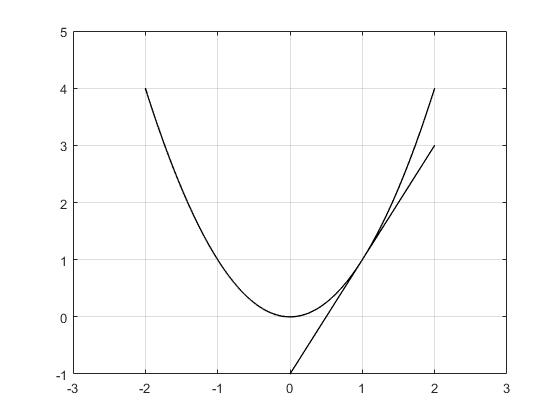

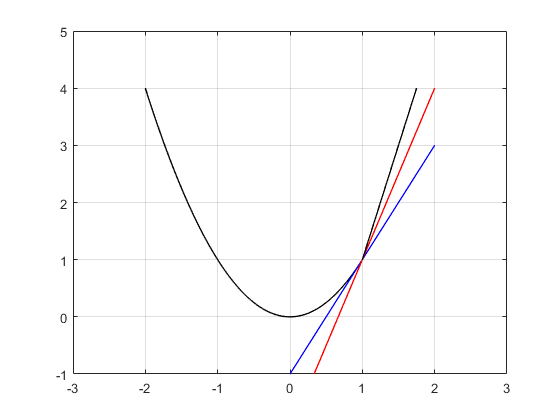

Recall from a previous post that AdaGrad modifies SGD by allowing for per-parameter learning rates that can hopefully take advantage of the curvature of the objective function to allow for faster convergence. With Adam we extend this idea by using moving averages of the first and second moments of the gradient to smooth the stochastic descent trajectory. The idea is that smoothing this trajectory should speed up convergence by making Adam behave more like batch gradient descent, but without needing the entire dataset at each step.

To discuss momentum and RMSProp we must recall the definition of an exponentially weighted moving average (EWMA), also called exponential smoothing or infinite impulse response filtering. Suppose we have a random sample $x_1, x_2, \dots, x_T$ of time-ordered data. An EWMA generates a new sequence $v_0, v_1, \dots, v_T$ defined by

$$v_0 = 0, \qquad v_t = \beta v_{t-1} + (1-\beta)\, x_t \quad (t = 1, \dots, T).$$

The hyperparameter $\beta \in [0, 1]$ is called a smoothing parameter. Intuitively, the EWMA is a way of smoothing time-ordered data. When $\beta = 0$, the EWMA sequence depends only on the current value of $x_t$, which just gives the original non-smoothed sequence back. When $\beta = 1$, the EWMA doesn’t depend at all on the current value $x_t$, which results in a constant value $v_t = v_0 = 0$ for all $t$.
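A minimal Python sketch of the EWMA recursion, assuming $v_0 = 0$ and a plain list of floats as input (the function name `ewma` is our own, not a library API). It reproduces the two limiting cases: $\beta = 0$ returns the data unchanged and $\beta = 1$ never moves off zero.

```python
def ewma(xs, beta):
    """Exponentially weighted moving average: v_t = beta * v_{t-1} + (1 - beta) * x_t, v_0 = 0."""
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must lie in [0, 1]")
    v = 0.0  # v_0 = 0
    out = []
    for x in xs:
        v = beta * v + (1.0 - beta) * x  # blend the previous average with the new point
        out.append(v)
    return out


xs = [1.0, 3.0, 2.0, 4.0]
print(ewma(xs, 0.0))  # [1.0, 3.0, 2.0, 4.0]  beta = 0: the original sequence
print(ewma(xs, 1.0))  # [0.0, 0.0, 0.0, 0.0]  beta = 1: stuck at v_0 = 0
print(ewma(xs, 0.5))  # [0.5, 1.75, 1.875, 2.9375]
```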

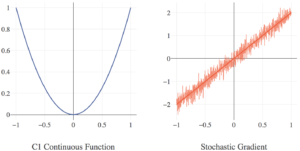

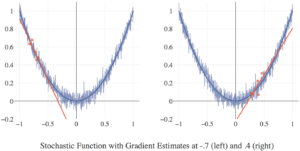

The EWMA is useful when we’re given noisy data and would like to filter out some of the noise to allow for better estimation of the underlying process. We thus in practice tend to favor higher values of $\beta$, usually 0.9 or higher. It is precisely this idea that momentum and RMSProp exploit. Before mentioning this, though, a minor point.

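It turns out that the EWMA tends to produce biased estimates for the early values $v_t$. When the process hasn’t generated enough data yet, the EWMA effectively sets all the remaining values it needs to 0 (it starts from $v_0 = 0$), which biases the early part of the EWMA sequence towards 0. For example, if every $x_t$ equals the same constant $c$, then $v_t = (1-\beta^t)\,c$ rather than $c$. To fix this problem, one can instead use a bias-corrected EWMA defined by

$$\hat v_t = \frac{v_t}{1 - \beta^t}.$$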

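One might naturally ask whether this bias correction term is something to worry about in practice, and the answer, of course, is it depends. The time it takes for the bias to go away scales roughly like $1/(1-\beta)$: since $\beta^t \approx e^{-t(1-\beta)}$ for $\beta$ close to 1, the correction factor $1-\beta^t$ is within about 5% of 1 once $t \approx 3/(1-\beta)$. Thus, the larger $\beta$ is, the longer it takes for this bias effect to go away. For $\beta = 0.9$ it takes about 30 iterations, and for $\beta = 0.999$ it takes about 3000 iterations.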
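A sketch of the bias correction, again in plain Python with illustrative names. The helper `iterations_until_bias_small` (hypothetical) counts how many steps it takes until $\beta^t \le 0.05$, i.e. until the uncorrected EWMA of a constant signal is within 5% of that constant; the 5% threshold is our own choice, used only to make “about 30” and “about 3000” concrete.

```python
import math


def bias_corrected_ewma(xs, beta):
    """EWMA with v_0 = 0, divided by (1 - beta**t) to remove the pull toward 0."""
    v, out = 0.0, []
    for t, x in enumerate(xs, start=1):
        v = beta * v + (1.0 - beta) * x
        out.append(v / (1.0 - beta ** t))
    return out


def iterations_until_bias_small(beta, tol=0.05):
    """Smallest t with beta**t <= tol, i.e. the raw EWMA of a constant is within tol (relative)."""
    return math.ceil(math.log(tol) / math.log(beta))


print([round(v, 10) for v in bias_corrected_ewma([5.0] * 3, 0.9)])  # [5.0, 5.0, 5.0]
print(iterations_until_bias_small(0.9))    # 29
print(iterations_until_bias_small(0.999))  # 2995
```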

In the context of machine learning, though, it doesn’t really make much of a difference when you’re training for many thousands of iterations anyway. To see why, suppose you have a modest dataset of 10,000 observations. If you train on it using a mini-batch size of 32, that gives about 312 iterations per epoch. Training for only 10 epochs then already puts you over 3000 iterations. Thus, unless you’re in a scenario where training finishes very quickly (e.g. fine-tuning in transfer learning), the bias correction probably won’t be important to you. It is thus not uncommon in practice to ignore bias correction when implementing EWMA in machine learning algorithms.
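The iteration count in the paragraph above is quick to check. Whether a final, partial mini-batch counts as an iteration depends on how the data loader is set up, so this sketch shows both counts; either way, 10 epochs exceed the roughly 3000 iterations needed for $\beta = 0.999$.

```python
import math

n_obs, batch_size, epochs = 10_000, 32, 10

full_batches = n_obs // batch_size           # 312 full mini-batches per epoch
all_batches = math.ceil(n_obs / batch_size)  # 313 if the last partial batch is kept
print(full_batches * epochs, all_batches * epochs)  # 3120 3130
```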

Now, the Adam algorithm uses EWMAs to estimate the first and second moments of the objective function’s gradient $\nabla_\theta f(\theta)$. Let us then briefly review what the moments of a random variable are.

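Recall that the $k$th moment $\mu_k$ of a random variable $X$ is defined by

$$\mu_k = \mathbb{E}\left[X^k\right].$$

We can see then that the first moment is just the expectation $\mu_1 = \mathbb{E}[X]$, and the second moment is the uncentered variance

$$\mu_2 = \mathbb{E}\left[X^2\right] = \operatorname{Var}(X) + \mathbb{E}[X]^2.$$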

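For a random vector $X = (X_1, \dots, X_n)$, we can extend the definition by defining the expectation component-wise by

$$\mathbb{E}[X] = \left(\mathbb{E}[X_1], \dots, \mathbb{E}[X_n]\right)$$

and the second moment component-wise by

$$\mathbb{E}\left[X^2\right] = \left(\mathbb{E}\left[X_1^2\right], \dots, \mathbb{E}\left[X_n^2\right]\right).$$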
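To make the component-wise definitions concrete, here is a small Monte Carlo sketch. The 2-dimensional Gaussian random vector is an assumption chosen purely for illustration; the sample estimates should land near the true first moments $(1, -3)$ and the second moments $\sigma_j^2 + \mu_j^2 = (5, 9.25)$.

```python
import random


def sample_moments(samples):
    """Component-wise first and second sample moments of equal-length vectors."""
    n, dim = len(samples), len(samples[0])
    first = [sum(s[j] for s in samples) / n for j in range(dim)]
    second = [sum(s[j] ** 2 for s in samples) / n for j in range(dim)]
    return first, second


rng = random.Random(0)
# Illustrative X = (X_1, X_2) with X_1 ~ N(1, 2^2) and X_2 ~ N(-3, 0.5^2), independent.
samples = [(rng.gauss(1.0, 2.0), rng.gauss(-3.0, 0.5)) for _ in range(100_000)]
mu1, mu2 = sample_moments(samples)
print(mu1)  # close to [1, -3]
print(mu2)  # close to [2^2 + 1^2, 0.5^2 + 3^2] = [5, 9.25]
```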

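Back to Adam again, denote the gradient of the (mini-batch) objective $f_t$ at step $t$ by $g_t = \nabla_\theta f_t(\theta_{t-1})$ and the element-wise square of the gradient by $g_t^2 = g_t \odot g_t$. We then take an EWMA of each of these using smoothing parameters $\beta_1, \beta_2 \in [0, 1)$, starting from $m_0 = v_0 = 0$:

$$m_t = \beta_1 m_{t-1} + (1-\beta_1)\, g_t \qquad \text{(momentum)}$$

$$v_t = \beta_2 v_{t-1} + (1-\beta_2)\, g_t^2 \qquad \text{(RMSProp)}$$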

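In practice, it’s common to take $\beta_1 = 0.9$ and $\beta_2 = 0.999$, which from the above discussion you can see favors high smoothing. We can now state the Adam parameter update formally:

$$\hat m_t = \frac{m_t}{1-\beta_1^t}, \qquad \hat v_t = \frac{v_t}{1-\beta_2^t}, \qquad \theta_t = \theta_{t-1} - \alpha\, \frac{\hat m_t}{\sqrt{\hat v_t} + \epsilon}.$$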

Note that the bias-corrected terms $\hat m_t$ and $\hat v_t$ are included in the update! This is because the authors of the paper did so. By the argument above, the correction only matters early in training, and for the momentum term $\hat m_t$ with $\beta_1 = 0.9$ that means only the first 30 or so iterations. Also note the presence of the $\epsilon$. This is included to prevent numerical instability (division by a number close to zero) in the denominator, and is typically fixed in advance to a very small number, usually $\epsilon = 10^{-8}$. You can think of this as a form of Laplace smoothing if you wish.
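Putting the pieces together, a minimal dependency-free sketch of the update with bias correction on both moments, as in the paper. The class `Adam`, its `step` method, and the toy quadratic objective are illustrative assumptions, not any library’s API.

```python
import math


class Adam:
    """Plain-Python Adam following the update equations above (vectors are lists of floats)."""

    def __init__(self, dim, alpha=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.alpha, self.beta1, self.beta2, self.eps = alpha, beta1, beta2, eps
        self.m = [0.0] * dim  # first-moment EWMA (momentum), m_0 = 0
        self.v = [0.0] * dim  # second-moment EWMA (RMSProp), v_0 = 0
        self.t = 0

    def step(self, theta, grad):
        """Return theta_t given theta_{t-1} and the gradient g_t, one coordinate at a time."""
        self.t += 1
        new_theta = []
        for i, g in enumerate(grad):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # bias-corrected first moment
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)  # bias-corrected second moment
            new_theta.append(theta[i] - self.alpha * m_hat / (math.sqrt(v_hat) + self.eps))
        return new_theta


# Toy objective f(theta) = (theta_0 - 3)^2 + 10 * (theta_1 + 1)^2, minimized at (3, -1).
def grad_f(theta):
    return [2 * (theta[0] - 3), 20 * (theta[1] + 1)]


opt = Adam(dim=2, alpha=0.05)
theta = [0.0, 0.0]
for _ in range(2000):
    theta = opt.step(theta, grad_f(theta))
print(theta)  # should end up close to [3, -1]
```

Every line inside the loop acts on a single coordinate, which is what makes the update component-wise.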

The ratio $\hat m_t / \sqrt{\hat v_t}$ is used to provide an estimate of the ratio of moments $\mathbb{E}[g_t] / \sqrt{\mathbb{E}[g_t^2]}$, which can be thought of as a point estimate of the best direction in which to descend and the best step size to take. Note that these are ratios of vectors, meaning that the divisions are assumed to be done component-wise.
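A quick way to see the “direction and step size” interpretation: since $|\mathbb{E}[g]| \le \sqrt{\mathbb{E}[g^2]}$, the population ratio lies between $-1$ and $1$. This sketch (scalar gradients, $\epsilon$ omitted, helper name `adam_ratio` our own) shows the estimate is close to 1 for a steady gradient, so Adam takes a full step of size $\alpha$, and much closer to 0 for zero-mean noise, so Adam barely moves.

```python
import math
import random


def adam_ratio(grads, beta1=0.9, beta2=0.999):
    """Bias-corrected m_hat / sqrt(v_hat) after a stream of scalar gradients (epsilon omitted)."""
    if not grads:
        raise ValueError("need at least one gradient")
    m = v = 0.0
    for g in grads:
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
    t = len(grads)
    m_hat, v_hat = m / (1 - beta1 ** t), v / (1 - beta2 ** t)
    return m_hat / math.sqrt(v_hat)  # all-zero gradients would divide by 0: that is what epsilon guards


rng = random.Random(1)
steady = [2.0] * 500                               # the same gradient every step
noisy = [rng.gauss(0.0, 2.0) for _ in range(500)]  # zero-mean noise only
print(adam_ratio(steady))  # about 1.0: move a full step alpha
print(adam_ratio(noisy))   # much closer to 0: the direction is unreliable
```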

We thus now have a “smoother” version of AdaGrad that is robust to the noise inherent in SGD. That the algorithm helps in practice has been validated repeatedly in numerous deep learning applications. Whether it actually helps in theory leads to a discussion of the algorithm’s convergence “guarantees”.

Convergence (Colin Shea-Blymyer)

The proof of convergence for this algorithm is very long, and I fear that we won’t gain much from going through it step by step. Instead, we’ll turn our attention to an analysis of the regret bounds for this algorithm.

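To begin, we’ll look at what regret means in this instance. Formally, regret is defined as

$$R(T) = \sum_{t=1}^{T} \left[f_t(\theta_t) - f_t(\theta^*)\right], \qquad \theta^* = \arg\min_{\theta} \sum_{t=1}^{T} f_t(\theta).$$

In English, that represents the sum of how far the loss at every step of our optimization has been from the loss at the best fixed point $\theta^*$ chosen in hindsight. A high regret means our optimization has not been very efficient.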
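As a concrete illustration of the definition, here is regret computed for a hypothetical online problem with losses $f_t(\theta) = (\theta - c_t)^2$ and a made-up sequence of iterates; for these losses the best fixed point in hindsight is the mean of the targets $c_t$.

```python
def regret(losses, thetas, theta_star):
    """R(T) = sum_t [f_t(theta_t) - f_t(theta_star)] for a list of per-step loss functions."""
    return sum(f(th) - f(theta_star) for f, th in zip(losses, thetas))


# Hypothetical online problem: f_t(theta) = (theta - c_t)^2 for a stream of targets c_t.
targets = [1.0, 3.0, 2.0, 2.0]
losses = [lambda th, c=c: (th - c) ** 2 for c in targets]  # c=c freezes each target
theta_star = sum(targets) / len(targets)  # 2.0 minimizes sum_t (theta - c_t)^2
thetas = [0.0, 1.0, 2.0, 2.0]             # iterates some optimizer might have produced
print(regret(losses, thetas, theta_star))  # 3.0
```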

Next we’ll define $g_t$ as the gradient of our function at the point we’ve reached in our algorithm at step $t$, and $g_{t,i}$ as the $i$th element of that gradient. We’ll use slicing on these gradients to define

$$g_{1:t,i} = \left[g_{1,i}, g_{2,i}, \dots, g_{t,i}\right].$$

That is, a vector that contains the $i$th element of all gradients we’ve seen. We’ll also define $\gamma$ as

$$\gamma \triangleq \frac{\beta_1^2}{\sqrt{\beta_2}},$$

which is a ratio of the decay rates of the first and second moments, squared and square-rooted, respectively. Further, $\lambda$ represents the exponential decay rate of $\beta_{1,t}$, the first-moment decay rate used at step $t$.
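The slicing notation just turns the gradient history into columns, one per coordinate. A short sketch with a hypothetical history of four 3-dimensional gradients:

```python
import math

# Hypothetical history of d = 3 dimensional gradients g_1, ..., g_4 (one row per step).
grads = [
    [0.5, -1.0, 0.0],
    [0.3, -0.5, 0.0],
    [0.4, 0.0, 2.0],
    [0.2, -0.5, 0.0],
]

# g_{1:t,i}: the i-th coordinate of every gradient seen so far (a column of the history).
g_slices = [list(col) for col in zip(*grads)]
print(g_slices[1])  # [-1.0, -0.5, 0.0, -0.5]

# Their l2 norms ||g_{1:t,i}||_2, which appear later in the regret bound.
print([round(math.sqrt(sum(x * x for x in col)), 4) for col in g_slices])  # [0.7348, 1.2247, 2.0]
```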

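The next step is to make some assumptions. First, assume the gradients of our function are bounded thus: $\|\nabla f_t(\theta)\|_2 \le G$, and $\|\nabla f_t(\theta)\|_\infty \le G_\infty$ for all $\theta$. Second, we assume a bound on the distance between any two points discovered by Adam:

$$\|\theta_n - \theta_m\|_2 \le D, \qquad \|\theta_m - \theta_n\|_\infty \le D_\infty \quad \text{for any } m, n \in \{1, \dots, T\}.$$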

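Finally, we define some ranges for our parameters: $\beta_1, \beta_2 \in [0, 1)$, and $\gamma = \beta_1^2 / \sqrt{\beta_2} < 1$; the learning rate at a given step $t$ is $\alpha_t = \alpha / \sqrt{t}$; finally, $\beta_{1,t} = \beta_1 \lambda^{t-1}$ for $\lambda \in (0, 1)$.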
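These conditions are easy to check mechanically. The sketch below (function name hypothetical, $\lambda = 0.99$ an arbitrary illustrative value) verifies the ranges, computes $\gamma$ for the common defaults $\beta_1 = 0.9$, $\beta_2 = 0.999$, and lists the first few step sizes $\alpha_t$ and decayed momentum coefficients $\beta_{1,t}$.

```python
import math


def check_assumptions(alpha, beta1, beta2, lam, steps=5):
    """Validate the parameter ranges above; return gamma and the first few alpha_t, beta_{1,t}."""
    if not (0.0 <= beta1 < 1.0 and 0.0 <= beta2 < 1.0):
        raise ValueError("need beta1, beta2 in [0, 1)")
    # gamma = beta1^2 / sqrt(beta2); treated as infinite when beta2 = 0
    gamma = beta1 ** 2 / math.sqrt(beta2) if beta2 > 0 else math.inf
    if not gamma < 1.0:
        raise ValueError("need gamma = beta1**2 / sqrt(beta2) < 1")
    if not 0.0 < lam < 1.0:
        raise ValueError("need lambda in (0, 1)")
    alpha_t = [alpha / math.sqrt(t) for t in range(1, steps + 1)]    # alpha_t = alpha / sqrt(t)
    beta1_t = [beta1 * lam ** (t - 1) for t in range(1, steps + 1)]  # beta_{1,t} = beta1 * lam^(t-1)
    return gamma, alpha_t, beta1_t


gamma, alpha_t, beta1_t = check_assumptions(alpha=0.001, beta1=0.9, beta2=0.999, lam=0.99)
print(round(gamma, 4))  # 0.8104
print(alpha_t[3])       # 0.0005
```

Note that $\beta_{1,t} \to 0$ as $t$ grows, so the variant analyzed here gradually switches momentum off, whereas the usual update keeps $\beta_1$ fixed.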

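Now we are armed to tackle the guarantee given for all $T \ge 1$:

$$R(T) \le \frac{D^2}{2\alpha(1-\beta_1)} \sum_{i=1}^{d} \sqrt{T \hat v_{T,i}} + \frac{\alpha(1+\beta_1) G_\infty}{(1-\beta_1)\sqrt{1-\beta_2}\,(1-\gamma)^2} \sum_{i=1}^{d} \|g_{1:T,i}\|_2 + \sum_{i=1}^{d} \frac{D_\infty^2 G_\infty \sqrt{1-\beta_2}}{2\alpha(1-\beta_1)(1-\lambda)^2},$$

where $d$ is the dimension of $\theta$.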

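To make analysis of this easier, we’ll treat each summation, and each fraction without a summation, as a separate term, and walk through them in order: the first fraction and the sum it multiplies, the second fraction and the sum it multiplies, and the final summation.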
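To see the terms side by side, here is a sketch that evaluates each piece of the bound from a gradient history. The bounds $D$, $D_\infty$, $G_\infty$ cannot in general be read off a finite run, so they are inputs the caller must supply; the function name and the constant-gradient example are our own.

```python
import math


def regret_bound_terms(grads, D, D_inf, G_inf, alpha, beta1, beta2, lam):
    """Evaluate each term of the regret bound from a gradient history g_1, ..., g_T.

    grads holds one list of d floats per step. D, D_inf and G_inf are the assumed
    bounds from the theorem; they must be supplied, not estimated, by the caller.
    """
    T, d = len(grads), len(grads[0])
    gamma = beta1 ** 2 / math.sqrt(beta2)

    # Bias-corrected second-moment EWMA after the last step: v_hat_{T,i}.
    v = [0.0] * d
    for g in grads:
        v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
    v_hat = [vi / (1 - beta2 ** T) for vi in v]

    first_frac = D ** 2 / (2 * alpha * (1 - beta1))
    first_sum = sum(math.sqrt(T * vh) for vh in v_hat)
    second_frac = alpha * (1 + beta1) * G_inf / (
        (1 - beta1) * math.sqrt(1 - beta2) * (1 - gamma) ** 2)
    # sum_i ||g_{1:T,i}||_2, the l2 norm of each coordinate's gradient history
    second_sum = sum(math.sqrt(sum(g[i] ** 2 for g in grads)) for i in range(d))
    # The final summand does not depend on i, so the sum is d times one fraction.
    last_sum = d * D_inf ** 2 * G_inf * math.sqrt(1 - beta2) / (
        2 * alpha * (1 - beta1) * (1 - lam) ** 2)
    return first_frac, first_sum, second_frac, second_sum, last_sum


# Hypothetical run: the gradient is (1, -1) at every one of T = 100 steps.
terms = regret_bound_terms([[1.0, -1.0]] * 100, D=1.0, D_inf=1.0, G_inf=1.0,
                           alpha=0.1, beta1=0.9, beta2=0.999, lam=0.99)
first_frac, first_sum, second_frac, second_sum, last_sum = terms
print(first_sum, second_sum)  # both about 20 = d * sqrt(T) here, since v_hat = 1
print(first_frac * first_sum + second_frac * second_sum + last_sum)  # the bound itself
```

With these made-up constants the last term dominates, largely because of the $(1-\lambda)^2$ in its denominator.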

The first fraction, $\frac{D^2}{2\alpha(1-\beta_1)}$, is saying that a large maximum distance between points discovered by Adam can allow for a larger regret, but this can be tempered by a large learning rate and a smaller decay rate $\beta_1$ on the first moment. This is scaled by $\sum_{i=1}^{d} \sqrt{T \hat v_{T,i}}$. Recall that $\hat v_T$ is the bias-corrected second moment estimate, so this sum reflects the (uncentered) variance of the gradients, scaled by the step number: more variance allows for more regret, especially in the later iterations of our method.

The second fraction, $\frac{\alpha(1+\beta_1) G_\infty}{(1-\beta_1)\sqrt{1-\beta_2}\,(1-\gamma)^2}$, has a lot to say.

We’ll start with the numerator: the learning rate, the decay rate of the first moment, and the maximum $\ell_\infty$ (Chebyshev) magnitude of our gradients, all aspects that allow for a larger regret when endowed with large values. In the denominator we have subtractive terms, so the regret can grow as the decay rates of the second and first moments, and (something like) the ratio $\gamma$ between them, approach 1. The sum $\sum_{i=1}^{d} \|g_{1:T,i}\|_2$ scales this fraction much in the same way $\sum_{i=1}^{d} \sqrt{T \hat v_{T,i}}$ scaled the first one: instead of referring to the variance (the second moment), as that sum does, this one is looking at the magnitudes of vectors formed by piecing together all previous gradients.

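This, for me, is the hardest part to gain an intuition of. Finally, we find ourselves at the last summation, $\sum_{i=1}^{d} \frac{D_\infty^2 G_\infty \sqrt{1-\beta_2}}{2\alpha(1-\beta_1)(1-\lambda)^2}$. This piece looks tricky because it’s opaque as to what the summation index $i$ refers to; in fact the summand doesn’t depend on $i$ at all, so the sum just multiplies the fraction by the dimension $d$. Looking at the fraction itself, we see the maximum (Chebyshev) distance between points discovered (squared) multiplying the maximum (Chebyshev) norm of the gradients, multiplying a term that was in the denominator of the second fraction: $\sqrt{1-\beta_2}$, built from one less the decay rate on the second moment. In the denominator we see the first bit of the denominator of the first fraction, $2\alpha(1-\beta_1)$ (the learning rate, and one less the decay rate on the first moment), and one less the decay of the decay of the first moment, squared: $(1-\lambda)^2$.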

Looking back through this, we can start to see how certain terms affect how large the regret of the algorithm can be: the factor $1-\beta_1$ is never found in the numerator, though $\beta_1$ itself is (via $1+\beta_1$), suggesting that high values of $1-\beta_1$ (that is, small $\beta_1$) lead to a smaller bound on $R(T)$. Inversely, the bounds $D$, $D_\infty$, and $G_\infty$ are never found in the denominator, clearly stating that smaller bounds on the distances and gradients lead to smaller regret values.

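Finally, by accepting that

$$\sum_{i=1}^{d} \|g_{1:T,i}\|_2 \le d\, G_\infty \sqrt{T}$$

(and likewise $\sum_{i=1}^{d} \sqrt{T \hat v_{T,i}} \le d\, G_\infty \sqrt{T}$, since each $\hat v_{T,i}$ is a weighted average of squared gradient entries, all at most $G_\infty^2$), the first two terms of the bound grow like $\sqrt{T}$ while the last is constant in $T$, so we find that

$$\frac{R(T)}{T} = O\left(\frac{1}{\sqrt{T}}\right).$$

This allows us to say that the average regret $R(T)/T$ of Adam converges to 0 in the limit as $T \to \infty$.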
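A numerical sketch of this corollary, replacing both sums by their worst case $d\,G_\infty\sqrt{T}$; all constants are hypothetical, chosen only for illustration. The bound on the average regret shrinks toward 0, while that average times $\sqrt{T}$ levels off, which is the $O(1/\sqrt{T})$ behavior.

```python
import math


def worst_case_bound(T, d, D, D_inf, G_inf, alpha, beta1, beta2, lam):
    """Right-hand side of the regret bound with both gradient sums replaced by d * G_inf * sqrt(T)."""
    gamma = beta1 ** 2 / math.sqrt(beta2)
    s = d * G_inf * math.sqrt(T)  # upper bound on each of the two sums
    first = D ** 2 / (2 * alpha * (1 - beta1)) * s
    second = alpha * (1 + beta1) * G_inf / ((1 - beta1) * math.sqrt(1 - beta2) * (1 - gamma) ** 2) * s
    last = d * D_inf ** 2 * G_inf * math.sqrt(1 - beta2) / (2 * alpha * (1 - beta1) * (1 - lam) ** 2)
    return first + second + last


# Hypothetical constants chosen only for illustration.
params = dict(d=10, D=1.0, D_inf=1.0, G_inf=1.0, alpha=0.01, beta1=0.9, beta2=0.999, lam=0.99)
for T in (10**2, 10**4, 10**6, 10**8):
    avg = worst_case_bound(T, **params) / T
    print(T, avg, avg * math.sqrt(T))  # avg -> 0 while avg * sqrt(T) levels off

# Echoing the beta1 observation above: a smaller beta1 gives a smaller bound.
print(worst_case_bound(10**4, **dict(params, beta1=0.5)) < worst_case_bound(10**4, **params))  # True
```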