1. Introduction

The Frank-Wolfe (FW) optimization algorithm has lately regained popularity thanks in particular to its ability to nicely handle the structured constraints appearing in machine learning applications.

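In this section, we consider the general constrained convex problem of the form:

$$\min_{x \in \mathcal{M}} f(x), \quad \text{with } \mathcal{M} = \operatorname{conv}(\mathcal{A}),$$

where $\mathcal{A} \subseteq \mathbb{R}^d$ is a finite set of vectors that we call atoms. Assume that the function $f$ is $\mu$-strongly convex with $L$-Lipschitz continuous gradient over $\mathcal{M}$.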

2. Original Frank-Wolfe algorithm

The Frank-Wolfe (FW) optimization algorithm, also known as the conditional gradient method, is particularly suited to this setup. It works as follows:

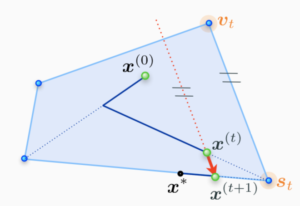

At the current iterate $x^{(t)}$, the algorithm finds a feasible search atom $s_t$ to move towards by minimizing the linearization of the objective function $f$ over $\mathcal{M}$; this is where the linear minimization oracle $\mathrm{LMO}_{\mathcal{A}}$ is used.

The next iterate $x^{(t+1)}$ is then obtained by a line search on $f$ between $x^{(t)}$ and $s_t$.
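The two steps above (oracle call, then line search) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the objective is taken to be the quadratic f(x) = 0.5 * ||x - b||^2 (so L = 1 and the line search has a closed form), and the names `dot`, `lmo`, and `frank_wolfe` are hypothetical helpers, not taken from the paper.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def lmo(atoms, grad):
    """Linear minimization oracle: the atom s minimizing <grad, s>."""
    return min(atoms, key=lambda s: dot(grad, s))


def frank_wolfe(atoms, b, x0, max_iter=200, tol=1e-10):
    """Frank-Wolfe for the assumed objective f(x) = 0.5 * ||x - b||^2
    over conv(atoms). x0 must be a point of conv(atoms)."""
    x = list(x0)
    for _ in range(max_iter):
        grad = [xi - bi for xi, bi in zip(x, b)]      # gradient of f at x
        s = lmo(atoms, grad)                          # search atom s_t
        d = [si - xi for si, xi in zip(s, x)]         # FW direction s_t - x
        gap = -dot(grad, d)                           # FW gap, bounds f(x) - f(x*)
        if gap <= tol:
            break
        # Exact line search on [0, 1]; closed form because f is quadratic.
        gamma = min(1.0, gap / dot(d, d))
        x = [xi + gamma * di for xi, di in zip(x, d)]
    return x
```

For example, with the four corners of the unit square as atoms and `b = (0.5, 2.0)`, calling `frank_wolfe(atoms, b, x0=(0.0, 0.0))` moves towards the point of the square closest to `b`. For a general objective the closed-form step would have to be replaced by a numerical line search.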

3. Variants of Frank-Wolfe algorithm

3.1 Away-Frank-Wolfe Algorithm

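However, when the solution lies on the boundary of $\mathcal{M}$, the FW iterates zig-zag between the vertices of the face containing the solution, which slows convergence. The away-steps Frank-Wolfe (AFW) algorithm was proposed to address this phenomenon: it adds the possibility of moving away from an active atom in $\mathcal{S}^{(t)}$, the set of atoms that carry nonzero weight in the current iterate.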
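A minimal sketch of the away-step idea follows, again assuming the quadratic objective f(x) = 0.5 * ||x - b||^2 so that the line search is available in closed form. The function name `away_step_fw`, the dictionary of weights, and the `1e-12` pruning threshold are illustrative choices, not part of the paper.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def away_step_fw(atoms, b, start=0, max_iter=1000, tol=1e-10):
    """Away-steps FW for the assumed objective f(x) = 0.5 * ||x - b||^2.

    Returns the final iterate and its weights {atom index: weight}."""
    alpha = {start: 1.0}                  # weights of the active atoms
    x = list(atoms[start])
    for _ in range(max_iter):
        grad = [xi - bi for xi, bi in zip(x, b)]
        s = min(range(len(atoms)), key=lambda i: dot(grad, atoms[i]))  # FW atom
        v = max(alpha, key=lambda i: dot(grad, atoms[i]))              # away atom
        d_fw = [si - xi for si, xi in zip(atoms[s], x)]
        d_away = [xi - vi for xi, vi in zip(x, atoms[v])]
        gap_fw, gap_away = -dot(grad, d_fw), -dot(grad, d_away)
        if gap_fw <= tol:
            break
        fw_step = gap_fw >= gap_away
        if fw_step:
            d, gamma_max = d_fw, 1.0
        else:
            # Largest step that keeps the weight of atom v nonnegative.
            d, gamma_max = d_away, alpha[v] / (1.0 - alpha[v])
        # Closed-form line search for the quadratic, clipped to [0, gamma_max].
        gamma = min(gamma_max, -dot(grad, d) / dot(d, d))
        if fw_step:
            alpha = {i: (1.0 - gamma) * a for i, a in alpha.items()}
            alpha[s] = alpha.get(s, 0.0) + gamma
        else:
            alpha = {i: (1.0 + gamma) * a for i, a in alpha.items()}
            alpha[v] -= gamma
        # Prune atoms whose weight has (numerically) reached zero.
        alpha = {i: a for i, a in alpha.items() if a > 1e-12}
        x = [xi + gamma * di for xi, di in zip(x, d)]
    return x, alpha
```

An away step is only taken when it promises more descent than the FW step. When the step hits `gamma_max`, the away atom leaves the active set, which is what lets the iterate reach the face containing the solution instead of zig-zagging towards it.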

3.2 Pairwise Frank-Wolfe Algorithm

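The pairwise Frank-Wolfe (PFW) algorithm is inspired by the MDM algorithm, which was originally proposed to address the polytope distance problem. Its idea is to move weight mass between only two atoms in each step: from the away atom $v_t$ to the FW atom $s_t$. Algorithm 3 is the same as Algorithm 2, except that the search direction is $d_t = s_t - v_t$ and the maximum step size is $\gamma_{\max} = \alpha_{v_t}$, the current weight of the away atom.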
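The pairwise update can be sketched as below, under the same assumption as before that the objective is the quadratic f(x) = 0.5 * ||x - b||^2. The name `pairwise_fw` and the weight dictionary are hypothetical; only the direction and maximum step size differ from an away-steps implementation.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def pairwise_fw(atoms, b, start=0, max_iter=1000, tol=1e-10):
    """Pairwise FW for the assumed objective f(x) = 0.5 * ||x - b||^2.

    Returns the final iterate and its weights {atom index: weight}."""
    alpha = {start: 1.0}                  # weights of the active atoms
    x = list(atoms[start])
    for _ in range(max_iter):
        grad = [xi - bi for xi, bi in zip(x, b)]
        s = min(range(len(atoms)), key=lambda i: dot(grad, atoms[i]))  # FW atom
        v = max(alpha, key=lambda i: dot(grad, atoms[i]))              # away atom
        gap_fw = -dot(grad, [si - xi for si, xi in zip(atoms[s], x)])
        if gap_fw <= tol:
            break
        # Pairwise direction: shift mass from atom v to atom s.
        d = [si - vi for si, vi in zip(atoms[s], atoms[v])]
        gamma_max = alpha[v]              # cannot remove more weight than v has
        gamma = min(gamma_max, -dot(grad, d) / dot(d, d))
        alpha[v] -= gamma
        alpha[s] = alpha.get(s, 0.0) + gamma
        # Prune atoms whose weight has (numerically) reached zero.
        alpha = {i: a for i, a in alpha.items() if a > 1e-12}
        x = [xi + gamma * di for xi, di in zip(x, d)]
    return x, alpha
```

Because only the weights of `s` and `v` change, all other active atoms keep their mass, whereas FW and away steps rescale every weight.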

4. Global Linear Convergence Analysis

Section 2 of the paper [1] presents the global linear convergence analysis. It first introduces the proof elements and the convergence proof; Section 2.2 then states the convergence results.

4.1 Proof of Convergence

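4.1.1

Let $d_t$ denote the search direction at iteration $t$, $r_t := -\nabla f(x^{(t)})$ the negative gradient, and $h_t := f(x^{(t)}) - f(x^*)$ the suboptimality, where $x^*$ is the optimum. As $f$ has an $L$-Lipschitz gradient and $x^{(t+1)}$ is obtained by line search, for every $\gamma \in [0, \gamma_{\max}]$ we get

$$f(x^{(t+1)}) \le f(x^{(t)} + \gamma d_t) \le f(x^{(t)}) + \gamma \langle \nabla f(x^{(t)}), d_t \rangle + \frac{\gamma^2}{2} L \|d_t\|^2. \quad (1)$$

Choose $\gamma$ to minimize the RHS of (1):

$$h_t - h_{t+1} \ge \frac{\langle r_t, d_t \rangle^2}{2 L \|d_t\|^2},$$

when $\gamma_{\max}$ is big enough, that is, when the minimizer $\gamma = \langle r_t, d_t \rangle / (L \|d_t\|^2)$ does not exceed $\gamma_{\max}$.

4.1.2

By $\mu$-strong convexity of $f$, we can get (with $e_t := x^* - x^{(t)}$), for every $\gamma \in [0, 1]$:

$$f(x^{(t)} + \gamma e_t) \ge f(x^{(t)}) + \gamma \langle \nabla f(x^{(t)}), e_t \rangle + \frac{\gamma^2}{2} \mu \|e_t\|^2.$$

The RHS is bounded below by its minimum over $\gamma$, which equals $f(x^{(t)}) - \langle r_t, e_t \rangle^2 / (2 \mu \|e_t\|^2)$. Use $\gamma = 1$ on the LHS, where it equals $f(x^*)$, to get:

$$h_t \le \frac{\langle r_t, e_t \rangle^2}{2 \mu \|e_t\|^2}.$$

4.1.3

Combining 4.1.1 and 4.1.2, and writing $\hat{d}_t := d_t / \|d_t\|$ and $\hat{e}_t := e_t / \|e_t\|$, we can get

$$h_t - h_{t+1} \ge \frac{\mu}{L} \, \frac{\langle r_t, \hat{d}_t \rangle^2}{\langle r_t, \hat{e}_t \rangle^2} \, h_t.$$

Thus, it suffices to lower bound the ratio $\langle r_t, \hat{d}_t \rangle / \langle r_t, \hat{e}_t \rangle$ by a positive constant to get a linear convergence rate.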

5. Conclusion

The paper reviews variants of the FW algorithm and shows, for the first time, global linear convergence for all the variants it considers. It also introduces the notion of a condition number of the constraint set.

6. References

1. Simon Lacoste-Julien & Martin Jaggi: On the Global Linear Convergence of Frank-Wolfe Optimization Variants.

2. Simon Lacoste-Julien & Martin Jaggi: http://www.m8j.net/math/poster-NIPS2015-AFW.pdf

3. R. Denhardt-Eriksson & F. Statti: http://transp-or.epfl.ch/zinal/2018/slides/FWvariants.pdf