King, Gary, Jennifer Pan, and Margaret E. Roberts. “Reverse-engineering censorship in China: Randomized experimentation and participant observation.” Science 345.6199 (2014): 1251722.

Hiruncharoenvate, Chaya, Zhiyuan Lin, and Eric Gilbert. “Algorithmically Bypassing Censorship on Sina Weibo with Nondeterministic Homophone Substitutions.” ICWSM. 2015.

Summary 1

King et al. conducted a large-scale experimental study of censorship in China by creating their own social media websites, submitting different posts, and observing how these were reviewed and/or censored. They obtained technical know-how in the use of automatic censorship software from the support services of the hosting company. Based on user guides, documentation, and personal interviews, the authors deduced that most social media websites in China conduct an automatic review through keyword matching. The keywords are generally hand-curated. They reverse-engineer the keyword list by posting their own content and observing which posts get through. Finally, the authors find that posts that invoke collective action are censored as compared to criticisms of the government or its leaders.

Reflection 1

King et al. conduct fascinating research in the censorship domain. (The paper felt as much a covert spy operation as research work). The most interesting observation from the paper is that posts about collective action are censored, but not those that criticise the government. This is labeled the collection action hypothesis vs. state critique hypothesis. This means two things – negative posts about the government are not filtered, and positive posts about the government can get filtered. The paper also finds that automated reviews are not very useful. The authors observe a few edge cases too – posts about corruption, wrongdoing, senior leaders in the government (however innocuous their actions might be), and sensitive topics such as Tibet are automatically censored. These may not bring about any collective action, either online or offline, but are still deemed censor-worthy. The paper makes the claim that certain topics are censored irrespective of whether they are for or against the topic.

I came across another paper by the same set of authors from 2017 – King, Gary, Jennifer Pan, and Margaret E. Roberts. “How the Chinese government fabricates social media posts for strategic distraction, not engaged argument.” American Political Science Review 111.3 (2017): 484-501. If censorship is one side of the coin, then bots and sockpuppets constitute the other. It would not be too difficult to imagine “official” posts by the Chinese government that favor their point of view and distract the community from more relevant issues.

The paper threw open several interesting questions. Firstly, is there punishment for writing posts that go against the country policy? Secondly, the Internet infrastructure in China must be enormous. From a systems scale, do they ensure each and every post goes through their censorship system?

Summary 2

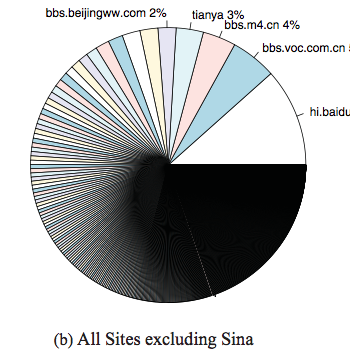

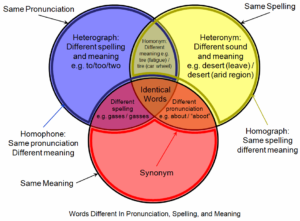

The second paper by Hiruncharoenvate et al. carries the idea of keyword-based censoring forward. They base their paper on the observation that activists have employed homophones of censored words to get past automated reviews. The authors develop a non-deterministic algorithm that generates homophones for the censored keywords. They suggest that homophone transformations would cost Sina Weibo an additional 15 hours per keyword per day. They also find that posts with homophones tend to stay 3 times longer on the site on average. The authors also tie up the paper by demonstrating that native Chinese readers did not face any confusion while reading the homophones – i.e. they were able to decipher their true meaning.

Reflection 2

Of all the papers we have read for the Computational Social Science, I found this paper to be the most engaging, and I liked the treatment of their motivations, design of experiments, results, and discussions. However, I also felt disconnected because of the language barrier. I feel natural language processing tasks in Mandarin can be very different from that in English. Therefore, I was intrigued by the choice of algorithms (tf-idf) that the authors use to obtain censored keywords, and then further downstream processing. I am curious to hear how the structure of Mandarin influences NLP tasks from a native Chinese speaker!

I liked Experiment 2 and the questions in the AMT task. The questions were unbiased and actually evaluate if the person understood which words were mutated.

However, the paper also raised other research questions. Given the publication of this algorithm, how easy is it to reverse engineer the homophone generation and block posts that contain the homophones as well? They keyword-matching algorithm could be tweaked just a little to add homophones to the list, and checking if several of these homophones occurred together or with other banned keywords.

Finally, I am also interested in the definitions of free speech and how they are implemented across different countries. I am unable to draw the line between promoting free speech and respecting the sovereignty of a nation and I am open to hearing perspectives from the class about these issues.

Read More