[1] Hiruncharoenvate, Chaya, Zhiyuan Lin, and Eric Gilbert. “Algorithmically Bypassing Censorship on Sina Weibo with Nondeterministic Homophone Substitutions.” ICWSM. 2015.

[2] King, Gary, Jennifer Pan, and Margaret E. Roberts. “Reverse-engineering censorship in China: Randomized experimentation and participant observation.” Science 345.6199 (2014): 1251722.

Summary[1]

The paper published by King and colleagues in 2014, researchers did not understand how the censorship apparatus works on sites like SinaWeibo, which is the Chinese version of Twitter. The censored weibos censored weibos were collected for the duration between October 2, 2009-November 20, 2014 and is comprised with approximately 4.4K weibos. The two experiments that the authors use rely on this dataset. Namely, an experiment on Sina Weibo itself and a second experiment where they ask trained users from Amazon Mechanical Turk to recognize the homophones. The second dataset consists of weibos from the public timeline of Sina Weibo, from October 13,2014–November 20,2014, accumulating 11,712,617 weibos.

Reflections[1]

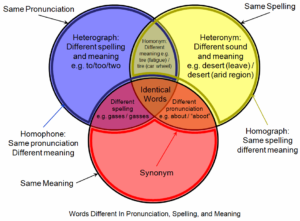

I’ve never heard of the term “homophone” before. Apparently, such as decomposition of characters, translation, and creating nicknames—to circumvent adversaries, creating Morphs have been in wide usage. Homophones are a subset of such morphs. The Venn Diagram also provides further insight. Overall, three questions are asked. First, are homophone-transformed posts treated differently from ones that would have otherwise been censored? Second, are homophone-transformed posts understandable by native Chinese speakers? Third, if so, in what rational ways might SinaWeibo’s censorship mechanisms respond? One question that I have is whether utilizing tf-idf score is the best possible choice for their analysis. Why not an LDA? I didn’t find a discussion regarding this choice of the model, even though it is detrimental to the results. The algorithm, as the authors suggest, has a high chance to generate homophones that have no meaning since they did not consult a dictionary. I find this to also have a serious impact in the model. This might look like a detail, but I think it might have been a better idea to keep the Amazon Turk instructions only in Mandarin, instead of asking in English that non-Chinese speakers not to complete the task. It would have been helpful if we had all the parameters of the logit model in a table. Regardless, they find that

Questions[1]

- Is the usage of homophones particularly that widespread in mandarin, compared to the Indo-European Language Family? Furthermore, can these methods be applied to other languages?

- How much of a complex conversation can occur with the usage of homophones? Is there language complexity metric, with complexity defined as some metric that conveys ideas effectively?

- An extension of the study could be the study of the linguistic features of posts containing homophones.

Summary [2]

The paper written by King et al has two parts. First, they create accounts on numerous Chinese social media sites. Then, they randomly submit different text and observe which texts are censored and which weren’t. The second task involves the establishment of a social media site, that uses Chinese media’s censorship technologies. Their goal is to reverse engineer the censorship process. Their results support a hypothesis, where criticism of the state, its leaders, and their policies are published, whereas posts about real-world events with collective action potential are censored.

Reflections [2]

This is an excellent paper in terms of causal inference and the entire structure. Gary King is a notorious author in experimental design studies aimed to draw causal inference. For the experimental part, first they introduce blocking based on the writing style. I didn’t find much about the writing style on the supplemental material. They also have a double dichotomy, that produces four experimental conditions: pro- or anti-government and with or without collective action potential. It is the randomization that allows to draw causal claims in the study.

Questions [2]

- How do they measure the “writing style”, when they introduce blocking?