- Bond, Robert M., et al. “A 61-million-person experiment in social influence and political mobilization.” Nature 489.7415 (2012): 295.

- Kramer, Adam DI, Jamie E. Guillory, and Jeffrey T. Hancock. “Experimental evidence of massive-scale emotional contagion through social networks.” Proceedings of the National Academy of Sciences 111.24 (2014): 8788-8790.

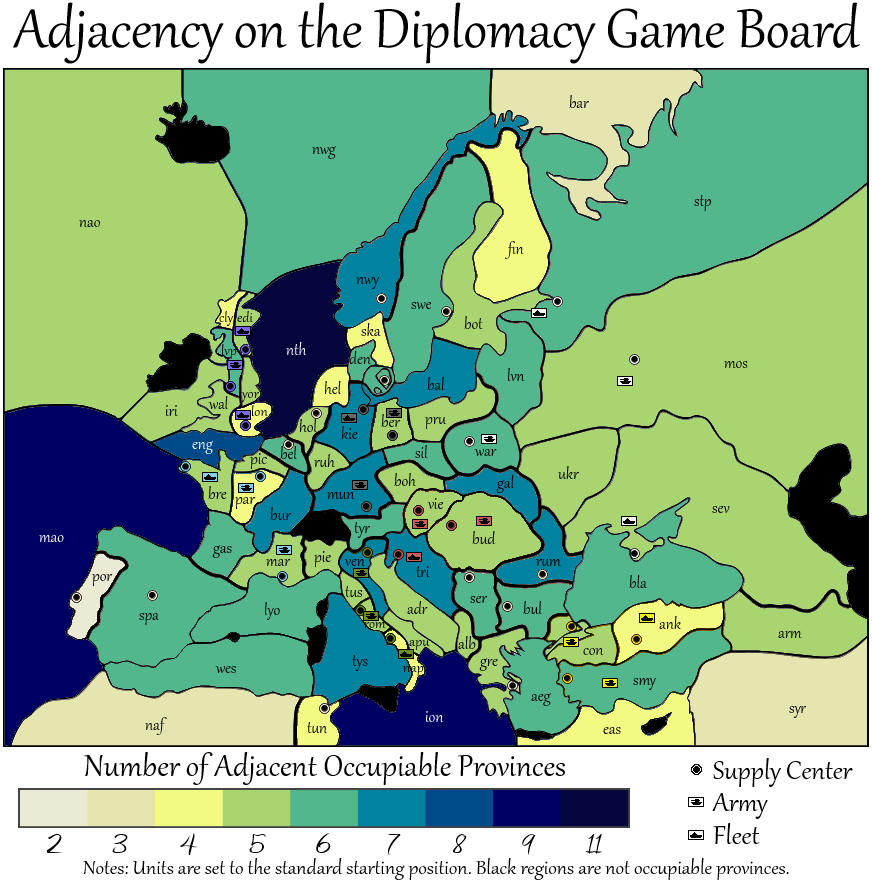

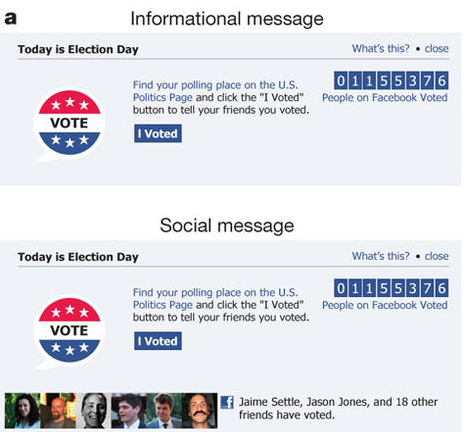

Both papers provide interesting analyses of user-generated data on Facebook. As far as I remember, the key idea behind the first paper was briefly discussed in one of the early lectures. While there might be some ethical concerns regarding data collection, usage, and human-subject consent in both studies, I find the papers very relevant and thought-provoking in today’s world, where social media is more or less an indispensable part of everyone’s lives.

The first paper, by Bond et al., discusses a randomized controlled trial of political mobilization messages on 61 million Facebook users during the 2010 U.S. congressional elections. The experiment showed an ‘informational’ or ‘social’ message at the top of the News Feed of Facebook users in the U.S. (18 years of age and above), as shown in the accompanying image.

Approximately 60 million users were shown the social message, 600 K users were shown the informational message, and 600 K users were not shown any message, adding up to the ‘61-million-person’ sample advertised in the title. The key finding of this experiment was that messages on social media directly influenced the political self-expression, information seeking, and real-world voting behavior of people (at least those on Facebook). Additionally, ‘close friends’ in a social network (a.k.a. strong ties) are responsible for the transmission of self-expression, information seeking, and real-world voting behavior. In essence, strong ties play a more significant role than ‘weak ties’ in spreading online and real-world behavior in online social networks. Next, I summarize my thoughts on this article.

The authors find that users who received the social message (instead of the plain informational message) were 2.08% more likely to click on the ‘I Voted’ button. This seems to suggest a causal link between the presence of images of friends who pushed the ‘I Voted’ button and the user’s decision to push the ‘I Voted’ button. I am not convinced by this suggestion because of the huge difference in sample size between the social and informational message groups. I believe online social networks are complex systems, and the spread of behaviors (contagions) in such systems is a non-linear and emergent phenomenon. I feel that ignoring the differences between the two samples (in terms of network size and structure) is a little unreasonable when making such comparisons at the gross level. I feel this particular result would be more convincing if the two samples were relatively similar and the findings were consistent across repeated experiments. Another interesting analysis would be to look at which demographic segments are influenced more by the social messages than by the informational messages. Is the effect reversed for certain segments of the user population? Lastly, approximately 12.8% of the 2.1 billion user accounts on Facebook are either fake or duplicate. It would be interesting to see how these accounts would affect the results published in this article.
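To make the sample-size concern a bit more concrete, here is a minimal sketch of a textbook two-proportion z-test, which takes unequal group sizes into account through the standard error. The group sizes follow the approximate figures quoted above; the click rates (20% and 18%) are hypothetical placeholders and are not taken from the paper, and the function name is my own. This is not necessarily the test the authors used, and it says nothing about differences in network structure between the groups.

```python
import math

def two_proportion_z(successes_a, n_a, successes_b, n_b):
    """Return (difference in proportions, z statistic) for two groups."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    # The standard error depends on both group sizes; the smaller
    # group contributes most of the uncertainty.
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return p_a - p_b, (p_a - p_b) / se

# Approximate group sizes from the summary above.
n_social = 60_000_000
n_info = 600_000

# Hypothetical click rates, for illustration only.
clicks_social = round(0.20 * n_social)
clicks_info = round(0.18 * n_info)

diff, z = two_proportion_z(clicks_social, n_social, clicks_info, n_info)
print(f"difference = {diff:.4f}, z = {z:.1f}")
```

In this kind of test the imbalance does not bias the estimated difference by itself; it mainly means the precision is limited by the smaller group. Whether the two groups differ in network size and structure is a separate question that a test like this cannot answer.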

The second article, by Kramer et al., suggests that emotions can spread like contagions from one user to another in online social networks. The article presents an experiment wherein the amount of positive or negative posts in the News Feed of Facebook users was artificially reduced (each emotional post had between a 10% and 90% chance of being omitted). The key observation was that when positive posts were reduced, the amount of positive words in the affected user’s status updates decreased. Similarly, when negative posts were reduced, the amount of negative words in the affected user’s status updates decreased. I think this result suggests that people innately reciprocate the emotions they experience (even in the absence of nonverbal cues), acting like feedback loops.

I feel that the weeklong study described in the article is somewhat insufficient to support the results. It might also be more convincing if the experiment were repeated and the observations remained consistent each time. Another thing that I feel is missing in the article is statistics about the affected users’ status updates, i.e. the mean and standard deviation of the number of status updates posted by the users. Additionally, it is important to know whether the users posted status updates only ‘after’ reading their News Feeds, and whether this ‘temporal’ information is captured in the data at all. Based on my limited observations of Facebook status updates, I feel that most of the time they relate to the daily experiences of the user, for example a visit to a restaurant, a promotion, a successful defense, holidays, or trips. I feel it’s very important that we avoid ‘apophenia’ when it comes to this kind of research. Also, it is unclear to me why the authors used Poisson regression here, and what the response variable is.
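As a rough illustration of the statistics I would have liked to see, the sketch below computes the percentage of positive and negative words in a set of status updates, along with the mean and standard deviation of the number of updates per user. Everything here is hypothetical: the tiny word sets stand in for a real lexicon (the paper relies on LIWC), and the user names, sample updates, and function name are invented for the example.

```python
import statistics

# Hypothetical, tiny stand-ins for a real emotion lexicon.
POSITIVE = {"happy", "great", "love"}
NEGATIVE = {"sad", "awful", "hate"}

def emotion_word_percentages(updates):
    """Return (% positive words, % negative words) across all updates."""
    words = [
        token.strip(".,!?").lower()
        for text in updates
        for token in text.split()
    ]
    if not words:
        return 0.0, 0.0
    positive = sum(word in POSITIVE for word in words)
    negative = sum(word in NEGATIVE for word in words)
    return 100 * positive / len(words), 100 * negative / len(words)

# Invented sample data: status updates grouped by user.
updates_per_user = {
    "user_a": ["I love this great day", "so sad"],
    "user_b": ["Got a promotion, happy!"],
    "user_c": ["Holiday trip", "Awful traffic", "Dinner out"],
}

# Mean and sample standard deviation of updates posted per user.
counts = [len(updates) for updates in updates_per_user.values()]
mean_updates = statistics.mean(counts)
std_updates = statistics.stdev(counts)

pos_pct, neg_pct = emotion_word_percentages(updates_per_user["user_a"])
print(mean_updates, std_updates, round(pos_pct, 2), round(neg_pct, 2))
```

A word-counting measure like this cannot tell whether an update was written after reading the News Feed or simply reflects the user’s day, which is exactly the temporal concern raised above.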