The Natural Language Toolkit (NLTK) is a suite of libraries for the Python programming language. Each of them is designed to work with natural language corpora: bodies of text generated from human speech or writing. NLTK assists natural language processing (NLP), which the NLTK developers define broadly as “computer manipulation of natural language.”

Uses for the libraries in the NLTK suite include, but are not limited to, data mining, data modeling, building natural-language-based algorithms, and exploring large natural language corpora. The NLTK site notes the following: “[NLTK] provides basic classes for representing data relevant to natural language processing; standard interfaces for performing tasks such as part-of-speech tagging, syntactic parsing, and text classification; and standard implementations for each task which can be combined to solve complex problems.” Moreover, there is extensive documentation that “covers every module, class and function in the toolkit, specifying parameters and giving examples of usage.”

I discovered NLTK because I am interested in automated sentiment and valence analysis. Having had a very brief exposure to Python, I was aware that it has a gentle learning curve, and I was confident that I could learn enough Python to use some of NLTK’s simpler sentiment analysis functions. However, I’m rusty with programming, and I keep running into roadblocks. Ultimately my goal is to use one or more of NLTK’s sentiment analysis libraries to explore certain natural language datasets, although I’m not there yet.

However, I can give some instructions and screenshots from what I’ve found along the way.

Getting started with NLTK:

- Download and install Python. The latest Python installation packages can be found at python.org, which includes OS-specific instructions.

- Download and install NLTK from NLTK.org, which also includes OS-specific instructions.

- For reference, there is a free introductory and practice-oriented book on NLTK here: http://www.nltk.org/book/

- The final step I can advise on is to import data and select the library from within the NLTK suite to work on it.

I myself haven’t gotten this far yet. It was difficult for me to install NLTK on my Mac and Linux machines using the NLTK instructions given for Unix-based systems. I think I have the program properly installed, but I’m not sure.
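One quick way to settle the "is it actually installed?" question is to ask Python itself. The snippet below is a minimal sketch that uses only the standard library; the helper name `describe_install` is my own invention, not part of NLTK. It reports whether the interpreter you are running can find a module called `nltk` and, if so, which version it reports.

```python
import importlib
import importlib.util


def describe_install(module_name="nltk"):
    """Return a short message saying whether module_name can be imported."""
    # find_spec returns None when this interpreter cannot locate the module.
    if importlib.util.find_spec(module_name) is None:
        return f"{module_name} is NOT installed for this Python interpreter"
    module = importlib.import_module(module_name)
    # Not every module defines __version__, so fall back to a placeholder.
    version = getattr(module, "__version__", "unknown version")
    return f"{module_name} is installed ({version})"


print(describe_install("nltk"))
```

If this reports that the module is missing even though the installer seemed to succeed, a likely cause (an assumption on my part) is that the package was installed for a different Python interpreter than the one running the script, which is easy to do on machines with more than one Python.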

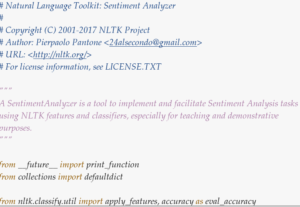

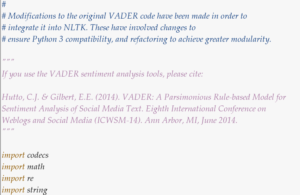

For further demonstrative purposes, however, here are screenshots of the documentation for two NLTK sentiment analysis tools. The first is from “Sentiment Analyzer,” which was developed to be broadly applicable in NLP, and the other two are from “Vader,” which is designed to work on text from social media.
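To give a feel for what using Vader might look like, here is a hedged sketch built on NLTK's `SentimentIntensityAnalyzer` class and its `polarity_scores` method. It assumes NLTK is installed and that the separate `vader_lexicon` resource can be downloaded. The wrapper function `vader_scores` and the sample sentence are hypothetical, written only for illustration; the function returns `None` instead of crashing if NLTK or the lexicon is unavailable.

```python
def vader_scores(text):
    """Return VADER polarity scores for text, or None if NLTK or its lexicon is unavailable."""
    try:
        import nltk
        from nltk.sentiment.vader import SentimentIntensityAnalyzer
    except ImportError:
        return None  # NLTK is not installed for this interpreter

    try:
        analyzer = SentimentIntensityAnalyzer()
    except LookupError:
        # The lexicon ships separately from the code; try to fetch it once.
        try:
            nltk.download("vader_lexicon", quiet=True)
            analyzer = SentimentIntensityAnalyzer()
        except (LookupError, OSError):
            return None  # download failed, e.g. no network access

    # The result is a dict with the keys 'neg', 'neu', 'pos' and 'compound'.
    return analyzer.polarity_scores(text)


print(vader_scores("NLTK is great, but installing it was painful!"))
```

The `compound` value is a single overall score between -1 (most negative) and +1 (most positive), while `neg`, `neu` and `pos` describe the proportions of the text that fall into each category.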

Okay, I think that’s all I have for now. I intend to keep working with NLTK throughout the semester — so if anybody is skilled with Python, or interested in valence/sentiment analysis, it would be great to talk with you about this!