Need:

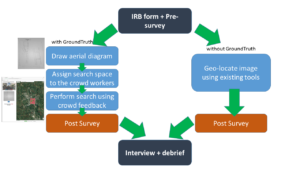

For a journalist, it is essential to verify news against several sources in as little time as possible. In particular, verifying images and videos from social media is a frequent task for modern journalists [1]. The verification process itself is tedious and time-consuming, and journalists often have very little time to verify breaking news because it must be published as soon as possible. Experts frequently have to search a map manually to find a potential match. GroundTruth [2] is a crowdsourcing-based geo-location system that lets users enlist crowd workers to find the potential location of an image. The system provides a number of features: uploading an aerial diagram of the mystery image, drawing an investigation area on Google Maps, dividing the search space into sub-regions in which the crowd workers compare the satellite view with the diagram, and letting the expert user review the crowd feedback to find a potential match. This novel approach to geo-locating, however, has not been evaluated against currently existing tools. My research designs an experiment to evaluate the experts’ reflections on using GroundTruth.

Approach:

For this research, I considered the scenario in which experts need to verify an image from a social media post. Such images are usually associated with a location, and my high-level goal was to replicate a similar situation in the experiment. To do so, I needed to set some ground rules for selecting the images. I considered both urban and rural images for my experimental design. Based on the levels of detail defined for urban images in [2], I created comparable levels of detail for rural images. These levels matter because they determine how the diagrams of the images are drawn. I chose a medium level of detail (levels 3 and 4) for the diagrams of both urban and rural images, since the findings in [2] suggest that medium-level detail performs best with crowd workers. I wanted all of the images, both urban and rural, to come from the same environment, so I selected the “Temperate Deciduous Forest” as my preferred biome. The reasons for choosing this biome include its presence in the Eastern United States, Canada, Europe, China, and Japan, its moderate population, its four seasons, and the similarity of its vegetation in urban and rural images. To select the images, I used the GeoGuessr website, which provides unlabeled street view images through the Google Maps API v3 [3]. I created a set of guidelines for selecting these images so that the selection could be reproduced later. I based the guidelines on two criteria: 1) the number of unique objects, and 2) the number of other objects. First, I identified the objects based on the details described in [2]. Among these objects, I designated some as “unique” based on features that distinguish them from their surroundings. In some initial searches, these unique objects contributed noticeably more to geo-locating the images than the other objects did.
The guidelines categorize the images as 1) Easy, 2) Medium, or 3) Hard. I chose four final images of medium difficulty for my experiment, two rural and two urban. Finally, I set a guideline for the location information that would be provided with each image: town-level information for urban images and county-level information for rural images. In the final images, the area for the rural images was approximately 3 to 3.5 mi², and the area for the urban images was approximately 2 to 2.75 mi².

Benefit:

As a geo-locating system, GroundTruth [2] uses the crowd’s contribution to reduce the lengthy manual tasks that experts would otherwise have to carry out themselves. The experimental design in this research can help evaluate the performance of crowd workers as they contribute to GroundTruth. The system can then be modified in accordance with the responses received from the expert journalists. Focusing on the features that the experts found useful, and revising those that the experts struggled to operate, could make the system more efficient. Furthermore, this experimental design can be used as a benchmark for future evaluations of other geo-locating systems.

Competition:

The competition for the GroundTruth system consists of the existing tools that experts currently use to verify image locations. Expert journalists use various tools for verification, ranging from TinEye, Acusense, etc. for image verification to Google Maps, Wikimapia, TerraServer, etc. for geo-locating images. Although the crowdsourcing-based site Panoramio has been shut down by Google, another crowdsourced website, Wikimapia, can be used to investigate the location of an image. Tomnod is another website that relies on volunteers to identify important objects and places of interest in satellite images. Additionally, Google’s upcoming neural-network-based PlaNet aims to determine the location of an image directly from its content.

Result:

For the result analysis, I chose two kinds of measures: the experts’ performance in geo-locating an image, and their responses to qualitative and quantitative survey questions. Performance would be analyzed by the completion time and by the distance between the selected location and the actual location. The survey questions focus on the experts’ reflections on the process, the outcome, and their subjective experience of using the GroundTruth system.
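The two performance measures can be sketched in code. The Python example below is a minimal illustration, not part of the study's tooling: it assumes locations are recorded as latitude/longitude pairs in degrees, approximates the Earth as a sphere (haversine formula), and uses hypothetical names (`haversine_km`, `score_trial`) and hypothetical timestamps in seconds.

```python
import math

# Mean Earth radius in km; using a sphere is an assumption of this sketch.
EARTH_RADIUS_KM = 6371.0088


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    # Clamp to 1.0 to guard against floating-point overshoot near antipodes.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def score_trial(selected, actual, start_s, end_s):
    """Return both performance measures for one (hypothetical) trial.

    selected, actual: (lat, lon) tuples in degrees.
    start_s, end_s: trial start and end times in seconds.
    """
    return {
        "completion_time_s": end_s - start_s,
        "error_km": haversine_km(*selected, *actual),
    }


if __name__ == "__main__":
    # Made-up coordinates and times, for illustration only.
    print(score_trial((37.23, -80.41), (37.25, -80.40), 0.0, 540.0))
```

For search areas of only a few square miles, a planar approximation would give nearly the same distances; the haversine form is used here simply because it needs no map projection.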

Discussion:

The experimental design discussed in this research can evaluate how experts use the GroundTruth system compared to the existing tools. However, the research faces some potential challenges, such as appropriate image selection, a short time limit, training experts to use the GroundTruth system, and outdated satellite imagery. Nonetheless, this research is an initial step toward understanding experts’ reflections on using crowdsourcing for geo-locating.

References:

[1] Silverman, C. (ed.). Verification Handbook.

[2] Kohler, R., Purviance, J. and Luther, K., 2017. GroundTruth: Bringing Together Experts and Crowds for Image Geolocation.

[3] GeoGuessr.com