This pair of papers falls under the topic of censorship in Chinese social media. King et al.’s “Reverse-Engineering Censorship” article takes an interesting approach to evaluating censorship experimentally. Their first stage was to create accounts on a variety of social media sites (100 in total) and send messages from around the world to see which messages were censored and which were left untouched. Accompanying this analysis are interviews with confidential sources, as well as the creation of their own social media site by contracting with Chinese firms and then reverse-engineering their software. Running their own site let the authors learn more about which posts are reviewed and censored and which accounts are permanently blocked, something typical observational studies cannot do. In contrast, in the “Algorithmically Bypassing Censorship” paper, the authors replace censored keywords with homophones in order to evade keyword-matching censorship algorithms. Their process is a non-deterministic algorithm, yet native speakers can still recover the meaning of almost all of the original, untransformed posts, and the transformed posts survive 3x longer than their censored counterparts.
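To make the homophone idea concrete, here is a minimal sketch of keyword-to-homophone substitution. It is not the paper's algorithm. The function name `transform_post` and the `homophones` lookup table are hypothetical. The random choice among candidates only mimics the "non-deterministic" property described above. The one real-world pair used in the usage line is the well-known internet homophone 河蟹 ("river crab") for 和谐 ("harmony").

```python
import random
import re
from typing import Dict, List, Optional


def transform_post(post: str,
                   homophones: Dict[str, List[str]],
                   rng: Optional[random.Random] = None) -> str:
    """Replace every known keyword in `post` with a randomly chosen homophone.

    `homophones` is a hypothetical lookup table (keyword -> candidate
    replacements). Because each match picks a candidate at random, the same
    post can come out differently on different runs.
    """
    if not homophones:
        return post  # nothing to substitute; also avoids an empty regex
    rng = rng or random.Random()
    # Match longer keywords first so overlapping keywords resolve greedily.
    pattern = re.compile("|".join(
        re.escape(k) for k in sorted(homophones, key=len, reverse=True)))
    # One pass over the text, so replacements are never re-scanned.
    return pattern.sub(lambda m: rng.choice(homophones[m.group(0)]), post)


print(transform_post("和谐社会", {"和谐": ["河蟹"]}))  # 河蟹社会
```

A single regex pass is used instead of repeated `str.replace` calls. This keeps a replacement that happens to contain a keyword from being substituted again.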

Regarding the “Reverse-Engineering” paper, one decision in their first stage that puzzled me was submitting all posts between 8 AM and 8 PM China time. While it wasn’t the specific goal of their research, submitting some after-hours posts could reveal how active the censorship process is in the middle of the night. That covers every potential branch: censored after posting, censored after being held for review, and accounts blocked.

From their results, I’m not sure which part surprised me more: that 63% of submissions that go into review are censored, or that the other 37% are not censored and eventually get posted. I suspect I need more experience with Chinese censorship before settling on a final opinion. It seems reasonable that automated review will flag a fair number of innocuous posts that are later approved, but 37% feels high. The authors note that a variety of technologies are used in this automated review process. That would imply high variability in the system's accuracy, and a large number of ineffective tools could explain why 37% of submissions are released for publication after review. On the other hand, the authors chose to write a number of posts about hot-button (“collective action”) issues, which is the source of my surprise at the 63% figure. I would initially have expected it to be higher. Even though the authors submitted both pro- and anti-government posts, I would suspect that extra censorship gets applied to defuse these issues regardless of stance. Again, I need more experience with Chinese social media to get a better feel for the results.

Regarding the “Algorithmically Bypassing” paper, I really enjoyed the methodology of taking a technique that activists already use to evade censorship and automating it so more users can apply it at scale. Without being particularly familiar with Mandarin, I suspect that building such a tool is easier in Mandarin than it would be in a language like English, which has fewer homophones. However, it did remind me of the images frequently shared on Facebook that read something like “fi yuo cna raed tihs yuo aer ni teh tpo 5% inteligance” (usually with longer words and more thoroughly scrambled letters, where only the first and last letters of each word stay in place).
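The scrambling rule in those images can be sketched as follows: keep each word's first and last letters and shuffle the interior. This is only an illustration of the meme's rule, not anything from either paper. The function names are hypothetical. As a simplifying assumption, words of three or fewer letters are left alone because they have nothing to shuffle.

```python
import random
import re
from typing import Optional


def scramble_word(word: str, rng: random.Random) -> str:
    """Shuffle the interior letters of `word`, keeping the first and last fixed."""
    if len(word) <= 3:
        return word  # no interior letters worth shuffling
    middle = list(word[1:-1])
    rng.shuffle(middle)
    return word[0] + "".join(middle) + word[-1]


def scramble_text(text: str, rng: Optional[random.Random] = None) -> str:
    """Apply `scramble_word` to every run of ASCII letters in `text`.

    Punctuation, digits, and spacing are left untouched.
    """
    rng = rng or random.Random()
    return re.sub(r"[A-Za-z]+", lambda m: scramble_word(m.group(0), rng), text)


print(scramble_text("reading scrambled words is surprisingly easy"))
```

The output changes from run to run. Like the homophone approach, this relies on human readers tolerating the distortion.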

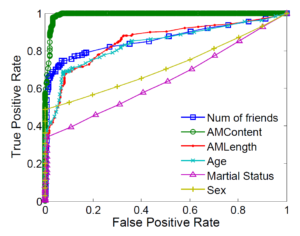

I found the authors’ headline result, that transformed posts typically live 3x longer than an equivalent untransformed post that gets censored, impressive until I saw the distribution in Figure 4. Most of the posts do appear to fit that 3x statistic. However, the ratio holds far more often in the form of surviving 3 hours instead of 1, while far fewer posts fall in the part of the curve where a post survives 15 hours instead of 5. It is a result that is accurate but also a bit misleading.