“A 61-million-person Experiment in Social Influence and Political Mobilization” by Bond et al. reports on how political mobilization messages on social media affect elections and personal expression.

Nearly all of the 61 million participants were in the social message group, while the other groups had only about 600 thousand each? Their methods seem fairly sound, but an imbalance like this makes me consider how easily other company-sponsored research could become biased. This should concern many people, especially since news media have repeatedly reported on published research too hastily or drawn unrelated conclusions from it. I feel that academic research, news media, and corporations are becoming so interconnected that people find it difficult to tell them apart.

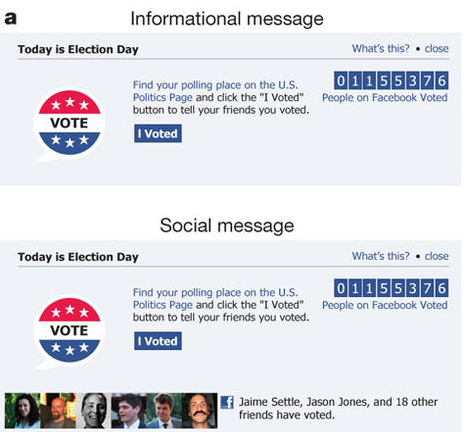

I see one issue with the experiment's design. The informational message still appeared on the Facebook website, where nearly all information and actions available to users can be shared with their Facebook friends. Many people would therefore assume that any message or action presented to them could be shared with their friends. Participants might have mistaken the message for another common social media sharing ploy and not realized that their self-reported “I voted” would be kept confidential. I think this design choice may have affected the results in a way that made it harder than necessary for the authors to reach their conclusions, because it blurs the distinction between the informational and social message conditions.

I think that in most cases, people should know when they are being studied. Exceptions can be made when disclosure would clearly harm the integrity of the research data. Still, participants might be more honest and accurate in their self-reports if they knew they were part of a study, since they might be more mindful that their answers could lead to unfounded and unjustified research findings and social changes. This question should be investigated through meta-analyses of research methods, of how participants perceive those methods, and of how the methods change a person’s behavior. I understand that a great deal of previous work has been done on studies like this, but I think its results and conclusions deserve to be widespread enough that more people outside academia understand them. Given how important this is, I am surprised it isn’t quite ‘common knowledge’ yet. Perhaps I shouldn’t be, since the scientific method is another critically important process that many people brush aside.

“Experimental Evidence of Massive-scale Emotional Contagion through Social Networks” by Kramer, Guillory, and Hancock

If “Posts were determined to be positive or negative if they contained at least one positive or negative word…”, then how can mixed emotions in social media posts be measured? Reducing emotions to simple, quantifiable categories can be helpful in many cases, but the simplification should be justified. Emotions are far more complex than this, even in infants, who have only the most basic desires. Even a one-dimensional scale, instead of binary categories, would capture more of the emotional range a person can feel.
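To make the question concrete, here is a minimal Python sketch contrasting the quoted rule with a one-dimensional score. The word lists, the function names (`binary_labels`, `valence_score`), and the sample post are hypothetical stand-ins for illustration, not the study's actual dictionary or code. Under the quoted rule, a post containing both kinds of words is flagged as both positive and negative, while a simple net score places it at a single point between -1 and 1.

```python
import re

# Hypothetical mini-lexicons for illustration only; NOT the study's actual word lists.
POSITIVE = {"happy", "love", "great", "proud"}
NEGATIVE = {"sad", "angry", "hate", "worried"}


def tokenize(post: str) -> list[str]:
    """Lowercase the post and split it into alphabetic words."""
    return re.findall(r"[a-z']+", post.lower())


def binary_labels(post: str) -> dict[str, bool]:
    """The quoted rule: a post counts as positive (or negative) if it
    contains at least one positive (or negative) word.
    A single post can therefore carry both labels at once."""
    words = tokenize(post)
    return {
        "positive": any(w in POSITIVE for w in words),
        "negative": any(w in NEGATIVE for w in words),
    }


def valence_score(post: str) -> float:
    """A hypothetical one-dimensional alternative:
    (positive words - negative words) / emotional words, a value in [-1, 1].
    Returns 0.0 when the post contains no emotional words."""
    words = tokenize(post)
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    total = pos + neg
    return 0.0 if total == 0 else (pos - neg) / total


if __name__ == "__main__":
    post = "So proud of my sister, but worried and sad she is moving away"
    print(binary_labels(post))
    print(valence_score(post))
```

For the sample post, the binary rule marks it as both positive and negative, while the net score comes out at about -0.33, leaning negative but not purely so.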

The researchers also found that users who were exposed to more emotional posts went on to post more and to be more engaged with social media afterward. I think this is alarming, since Facebook and other social platforms are financially motivated to keep users online and engaged as much as possible, which gives them a reason to favor emotionally charged content. That incentive contradicts recent claims by Facebook and other social media companies that they want to guard against deliberately outrageous and inflammatory posts. I see this as a major issue in current politics and in the tech industry.