Cheng, J., Danescu-Niculescu-Mizil, C., & Leskovec, J. (2015, April). Antisocial Behavior in Online Discussion Communities. In ICWSM (pp. 61-70).

The paper presents an interesting analysis of users on news communities. The objective is to identify users who engage in antisocial behavior such as trolling, flaming, bullying and harassment in these communities. The authors reveal compelling insights into the behavior of users who were banned: they post irrelevant content, garner more replies, and concentrate heavily on a small number of discussion threads. Additionally, posts by such users are less readable and lack positive emotion, and more than half of these posts are deleted. Further, the text quality of their posts declines and the probability of their posts being deleted increases over time. Finally, the authors suggest that certain user features can be used to detect users who are likely to be banned, and a few techniques for identifying such “bannable” users are discussed towards the end of the paper.

First, I would like to quote from the Wikipedia article about Breitbart News:

Breitbart News Network (known commonly as Breitbart News, Breitbart or Breitbart.com) is a far-right American news, opinion and commentary website founded in 2007 by conservative commentator Andrew Breitbart. The site has published a number of falsehoods and conspiracy theories, as well as intentionally misleading stories. Its journalists are ideologically driven, and some of its content has been called misogynist, xenophobic and racist.

My thought after looking through Breitbart.com was: isn’t this community itself somewhat antisocial? One can easily imagine a lot of liberals getting banned in this forum for contesting the posted articles. And this is what the homepage of Breitbart.com looked like in the morning:

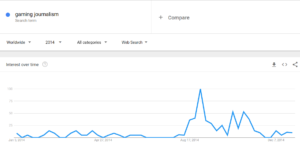

While the paper itself presents a stimulating discussion about antisocial behavior in online discussion forums, I feel that there is a presumption that a user’s antisocial behavior always results in them being banned. The authors discuss that communities are initially tolerant of antisocial posts and users, and this tolerance can easily be exploited to evade a ban. For example, a troll may initially post antisocial content, switch to the usual positive discussions for a substantial period of time, and then return to posting antisocial content. Also, what is to stop a banned user from creating a new account and returning to the community? All you need is a new e-mail account for Disqus. This is important because most of these news communities don’t require any reputation for posting comments on their articles. On the other hand, I feel that the “gamified” reputation system on communities like Stack Exchange would act as a deterrent against antisocial behavior. Hence, it would be interesting to find out who gets banned in such “better designed” communities, and whether the markers of antisocial behavior there are similar to those in news communities. An interesting post here.

Another question to ask is whether antisocial behavior on online discussion forums has deeper tie-ins. Are these behaviors predictors of some pathological condition in the person posting the content? The authors briefly mention these issues in the related work. It would also be interesting to discover whether a troll on one community is also a troll on another. The authors mention that this research can lead to new methods for identifying undesirable users in online communities. I feel that detecting undesirable users beforehand is a bit like finding criminals before they have committed the crime, and there may be some ethical issues involved here. A better approach might be to look for linguistic markers that suggest antisocial themes in the content of a post and warn the user of the consequences of submitting it, instead of recommending users to the moderator for banning after the damage has already been done. This also leads to the question of which events, news or articles generally lead to antisocial behavior. Are there certain contentious topics that lead regular users to bully and troll others? Another question to ask here is: can we detect debates in the comments on a post? This might be a relevant feature for predicting antisocial behavior. Additionally, establishing a causal link between the pattern of replies in a thread and the content of the replies may help to identify “potential” antisocial posts. A naïve approach to handle this might be to simply restrict the maximum number of comments a user can submit to a thread. Another interesting question may be whether FBUs start contentious debates, i.e. do they generally start a thread or do they prefer replying to existing threads? The authors provide some indication on this question in the section “How do FBUs generate activity around themselves?”.
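To make the naïve per-thread cap mentioned above a little more concrete, here is a minimal sketch of how it could work. This is only my own illustration and not something from the paper: the class name `ThreadCommentCap`, the method `try_post`, and the idea of keeping the counts in memory are all assumptions.

```python
from collections import defaultdict


class ThreadCommentCap:
    """Hypothetical helper: limits how many comments one user may post per thread."""

    def __init__(self, max_per_thread: int):
        if max_per_thread < 1:
            raise ValueError("max_per_thread must be at least 1")
        self.max_per_thread = max_per_thread
        # Maps (user_id, thread_id) -> number of comments accepted so far.
        self._counts = defaultdict(int)

    def try_post(self, user_id: str, thread_id: str) -> bool:
        """Return True and record the comment if the user is under the cap."""
        key = (user_id, thread_id)
        if self._counts[key] >= self.max_per_thread:
            return False  # cap reached: the comment would be rejected
        self._counts[key] += 1
        return True


# Usage with made-up identifiers: a cap of 2 comments per user per thread.
cap = ThreadCommentCap(max_per_thread=2)
results = [cap.try_post("user_a", "thread_1") for _ in range(3)]
other_thread = cap.try_post("user_a", "thread_2")
```

A cap like this treats every user the same, so it would also restrict regular users who are simply very engaged in a thread, which is part of why I call it naïve.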

Lastly, I feel that a classifier precision of 0.8 is not good enough for detecting FBUs. I say this because the objective here is to recommend potentially antisocial users to human moderators for banning, so as to keep their manual labor low, and having a lot of false positives will defeat this purpose to some extent. Also, I don’t quite agree with the claim that the classifiers are cross-domain. I feel that there will be a huge overlap between CNN and Breitbart.com in the area of political news. Moreover, the dataset is derived primarily from news websites where people discuss and comment on articles written by journalists and editors. The findings might not apply to Q&A websites (e.g. Quora, Stack Overflow), places where users can submit articles (e.g. Medium), or more technically inclined communities (e.g. TechCrunch).
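To put the precision concern in numbers: precision is the fraction of flagged users who really are FBUs, so a precision of 0.8 means that roughly one in five recommendations is wrong. The small sketch below works this out; the function name `expected_false_positives` and the figure of 1000 flagged users are my own assumptions for illustration, not values from the paper.

```python
def expected_false_positives(num_flagged: int, precision: float) -> float:
    """Expected number of flagged users who are NOT actually FBUs.

    precision = true positives / (true positives + false positives),
    so the false-positive share of the flagged set is (1 - precision).
    """
    if not 0.0 <= precision <= 1.0:
        raise ValueError("precision must be between 0 and 1")
    if num_flagged < 0:
        raise ValueError("num_flagged must be non-negative")
    return num_flagged * (1.0 - precision)


# Hypothetical workload: the classifier recommends 1000 users for review.
flagged = 1000
wrong = expected_false_positives(flagged, precision=0.8)
print(f"About {wrong:.0f} of {flagged} flagged users would be false positives")
```

Under these assumed numbers, moderators would review about 200 users who should not be banned, which is the extra manual labor I am worried about.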