The paper deals with a topic that is highly relevant to social media: antisocial behavior, including trolling and cyberbullying.

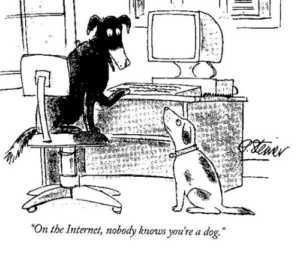

The authors set out to understand the patterns of trolls through their online posts, the effect of the community on them, and whether their behavior can be predicted. It is understandable that the anonymity of the internet can cause otherwise ordinary users to act differently online. A popular cartoon caption says, “On the Internet, nobody knows you’re a dog.” Anonymity is a two-way street: you can act any way you want, but so can everyone else.

The community’s response to trolling behavior is also interesting, as the paper shows that strict censorship results in even worse behavior. This is why some communities use shadowbans, where users are not told that they have been banned: their posts remain visible to themselves but not to others. Are those kinds of bans included in FBUs? The bias of community moderators should also be brought into question. Some moderators are sensitive about certain topics and ban users for even minor offenses, so moderator behavior can itself lead to more post deletions and bans. This makes the use of post deletion as ground truth questionable. One funny observation is that IGN has more deleted posts than reported posts. What could be the reason?

The paper doesn’t cover all the ground related to trolling and abuse. A large number of bans happen when trolling users abuse others over personal messages, and the paper doesn’t seem to take that into account. The paper also does not include temporarily banned users. I believe including them would provide crucial insight into how some users correct their behavior and exercise self-control. I don’t think deleted or reported posts should be a metric for measuring antisocial behavior. Some people post on controversial topics or go off-topic, and their posts are reported. This does not constitute antisocial behavior, but it would be counted by a metric based on deleted posts. The bias of moderators is already mentioned above. Cultural differences play a role too. In my experience, a legitimate post has often been branded as trolling because the user was not very comfortable with English, or with American sentence structure. For example, the phrase “having a doubt” communicates something different in Indian English than in American English. A better solution is to analyze discussions and debates on a community forum and how users react to them.

Based on the issues discussed above, the prospect of predicting antisocial behavior from only 10 posts is problematic. Users can be banned based on such predictions. In communities like Steam (a gaming marketplace), getting banned means losing access to one’s account and purchased video games, so bans carry real consequences. Banning users over 10 posts could be over-punishment. A single bad day could cost someone their online account.

In conclusion, the paper is a good step towards understanding trolling behavior, but such a multi-faceted problem cannot be identified using simple metrics. It requires social context and a more sophisticated approach. Applying such identification also requires some thought, so that it is fair and not heavy-handed.