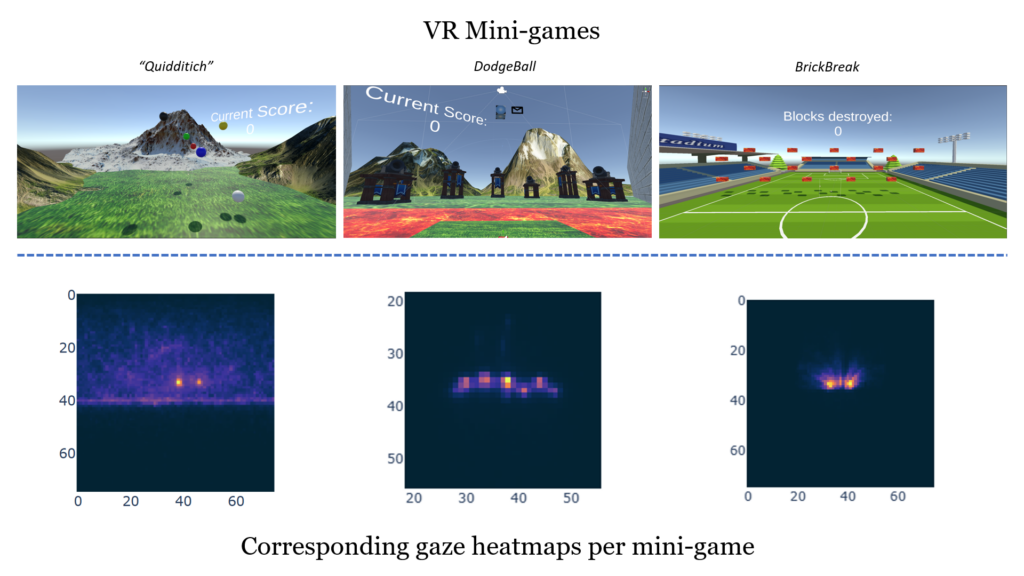

Using gaze data from a previous study, I am leading an exploratory effort to predict a user’s task in VR solely from their gaze. Preliminary results show that we are able to confidently identify the task in real time. Future work includes feature engineering to make inference of common AR/VR tasks (or “settings”) more robust and finer-grained.

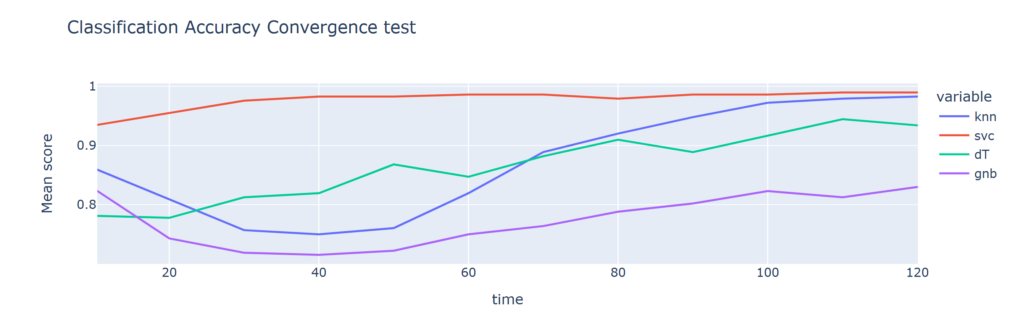

To further evaluate our gaze heatmap feature, we train a few simple machine learning classifiers (K-Nearest Neighbors, a Support Vector Machine with an RBF kernel, a Decision Tree, and Gaussian Naive Bayes) to predict the task being performed throughout a user trial. The graph above shows promising results: the classifiers identify the task within a short period of time.
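
As a rough illustration of the idea, the sketch below bins gaze points into a small heatmap and classifies it with K-Nearest Neighbors, one of the four classifiers named above. It is not the study's pipeline: the grid size, the task names (`reading`, `search`), the synthetic gaze generator, and all function names are assumptions made up for this example, and the data is randomly generated rather than taken from the study.

```python
import math
import random
from collections import Counter

GRID = 4  # assumed heatmap resolution (GRID x GRID cells); not from the study


def gaze_heatmap(points, grid=GRID):
    """Bin normalized gaze points (x, y in [0, 1]) into a flattened heatmap that sums to 1."""
    counts = [0.0] * (grid * grid)
    for x, y in points:
        col = min(int(x * grid), grid - 1)
        row = min(int(y * grid), grid - 1)
        counts[row * grid + col] += 1.0
    total = sum(counts) or 1.0  # avoid dividing by zero for an empty window
    return [c / total for c in counts]


def knn_predict(train, query, k=3):
    """Majority vote among the k training heatmaps closest to the query (Euclidean distance)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def clamp(value):
    """Keep a coordinate inside the normalized viewport."""
    return min(max(value, 0.0), 1.0)


def synthetic_window(center, rng, n=60, spread=0.08):
    """Hypothetical stand-in for a short window of gaze samples around a task-specific focus."""
    return [
        (clamp(rng.gauss(center[0], spread)), clamp(rng.gauss(center[1], spread)))
        for _ in range(n)
    ]


# Hypothetical tasks, each with an assumed typical gaze location.
TASK_CENTERS = {"reading": (0.25, 0.25), "search": (0.75, 0.75)}


def build_dataset(seed, windows_per_task):
    """Generate (heatmap, task) pairs from synthetic gaze windows."""
    rng = random.Random(seed)
    return [
        (gaze_heatmap(synthetic_window(center, rng)), task)
        for task, center in TASK_CENTERS.items()
        for _ in range(windows_per_task)
    ]


train = build_dataset(seed=0, windows_per_task=20)
test = build_dataset(seed=1, windows_per_task=10)
accuracy = sum(knn_predict(train, heatmap) == task for heatmap, task in test) / len(test)
print(f"k-NN accuracy on synthetic windows: {accuracy:.2f}")
```

The synthetic tasks here are deliberately easy to separate, so the printed accuracy says nothing about real gaze data. In practice, a library such as scikit-learn provides all four classifiers (`KNeighborsClassifier`, `SVC`, `DecisionTreeClassifier`, `GaussianNB`) behind a common `fit`/`predict` interface, which makes it straightforward to compare them on the same heatmap features.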

This work is in preparation for publication.