It is widely believed that AR glasses will be the next generation of personal information access devices. Lightweight AR glasses have the potential to give users hands-free access to any information, anytime, anywhere, without the need for a physical display. An intelligent AR interface will need to handle challenges such as avoiding the occlusion of important real-world objects, using real-world surfaces when appropriate, and determining how and when content should move along with the user. Meeting these challenges calls for an adaptive AR interface. Building such an interface, however, requires knowledge of the user's context: a real-world object can be important to the user in one context and completely unimportant in another. For example, if you are in a conversation, what the other person is saying and where their face is are both important. However, if you are reading a book in a café, the face and speech of a person sitting at another table in front of you are of little importance to you (usually).

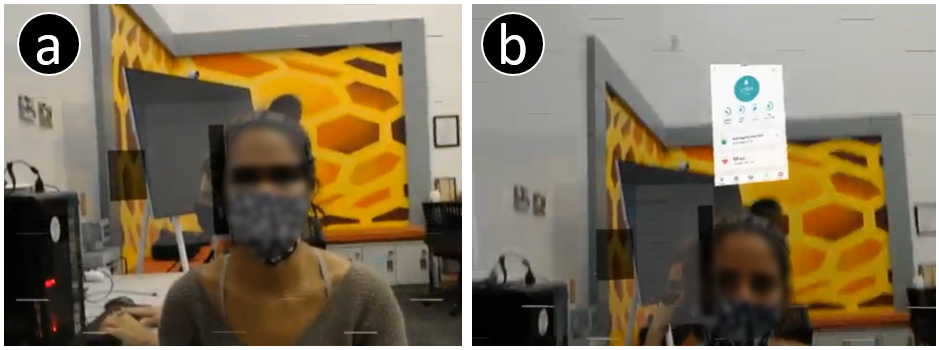

In this project, we designed an interface that detects when the user is in a conversation with someone else and, based on the content of that conversation, makes different virtual content available to support it. The interface also avoids occluding the other person's face, so that the user remains aware of that person's social cues.
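The face-occlusion constraint can be illustrated with a minimal sketch. It is not the project's implementation. It assumes that a face detector (not shown) returns the conversation partner's face as a rectangle in normalized view coordinates, and that the interface has an ordered list of candidate slots for a virtual panel. The names `Rect`, `inflate`, and `place_panel`, as well as the `margin` parameter, are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in normalized view coordinates (assumed 0..1)."""
    x: float  # left edge
    y: float  # top edge
    w: float  # width
    h: float  # height

    def intersects(self, other: "Rect") -> bool:
        # Two rectangles overlap only if they overlap on both axes.
        return (self.x < other.x + other.w and other.x < self.x + self.w
                and self.y < other.y + other.h and other.y < self.y + self.h)


def inflate(r: Rect, margin: float) -> Rect:
    """Grow a rectangle by `margin` on every side."""
    return Rect(r.x - margin, r.y - margin, r.w + 2 * margin, r.h + 2 * margin)


def place_panel(face: Optional[Rect],
                candidates: Sequence[Rect],
                margin: float = 0.05) -> Optional[Rect]:
    """Return the first candidate slot that leaves the face visible.

    `candidates` is ordered by preference. If no face is detected, the
    most preferred slot is used. If every slot would cover the face,
    None is returned so the caller can hide or defer the content.
    """
    if face is None:
        return candidates[0] if candidates else None
    keep_clear = inflate(face, margin)  # hypothetical safety margin
    for slot in candidates:
        if not slot.intersects(keep_clear):
            return slot
    return None


face = Rect(0.4, 0.3, 0.2, 0.3)
slots = [Rect(0.35, 0.35, 0.3, 0.2),   # centered, would cover the face
         Rect(0.7, 0.3, 0.25, 0.3)]    # to the right of the face
chosen = place_panel(face, slots)
```

In this example the centered slot is rejected because it overlaps the face region, and the slot to the right is chosen. A real system would also need to track the face over time and smooth panel movement, which this sketch leaves out.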

2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), 2022.