Paper-

[1] Examining the Alternative Media Ecosystem through the Production of Alternative Narratives of Mass Shooting Events on Twitter – Kate Starbird

Summary-

This paper uses Twitter data to construct a network graph of various mainstream, alternative, government-controlled, left-leaning, and right-leaning media sources. It then uses the structure of the graph to draw qualitative observations about the alternative news ecosystem.

Reflection-

Some of my reflections are as follows:

This paper uses a very interesting phrase, “democratization of news production”. Broadly, this touches on the fundamental struggle between power and freedom, and the drawbacks of having too much of either. In this case, the increasing democratization of news has weakened the reliability of the very information we receive.

It would be interesting to see what users involved in alternative media narratives follow beyond alternative media content, by analyzing their tweets in general, outside the context of gun violence and mass shootings.

I found the case of InfoWars interesting: it was connected to only one node in the network graph. What were the reasons for that? Maybe InfoWars did not publish much content about mass shootings, maybe its users rarely refer to other sources, or maybe it produces content that no one else is producing and thus stands largely alone.

Only 1,372 users tweeted about more than one alternative media event over the period studied. A longer study might be worthwhile, since conspiracy-worthy events happen rarely, and 10 months may not be enough to reveal the full network structure.

It was very interesting that the paper found evidence of bots propagating fake news, and that it later claims the sources were largely pro-Russia, which might give some insight into Russian interference in the 2016 election. The paper also notes that the sources were not predominantly left-leaning or right-leaning; what they had in common was an anti-globalist bias. They insinuated that Western governments are essentially controlled by powerful external interests, painting the West in a bad light.

The graph provides a sort of spatial link between source domains, but it would be interesting to also add a temporal link, to see which sources originate the information and how it propagates over time through these ecosystems. The paper also alludes to this in its conclusion.

The graph is dominated by aqua nodes, which hints that selective exposure is prevalent here as well. This provides further evidence for a topic discussed in last week’s papers: users who tend to believe in conspiracies interact with alternative media more than with mainstream or other types of media.

It is very interesting that 66 of the 80 alternative media sites cited mainstream media at some point, while not a single mainstream media site cited an alternative media site. This hints at the psyche of alternative media. It paints these sources as “underdogs” fighting against an indifferent “big media” machine, a picture I feel is quite appealing to people who are prone to believing in conspiracies.

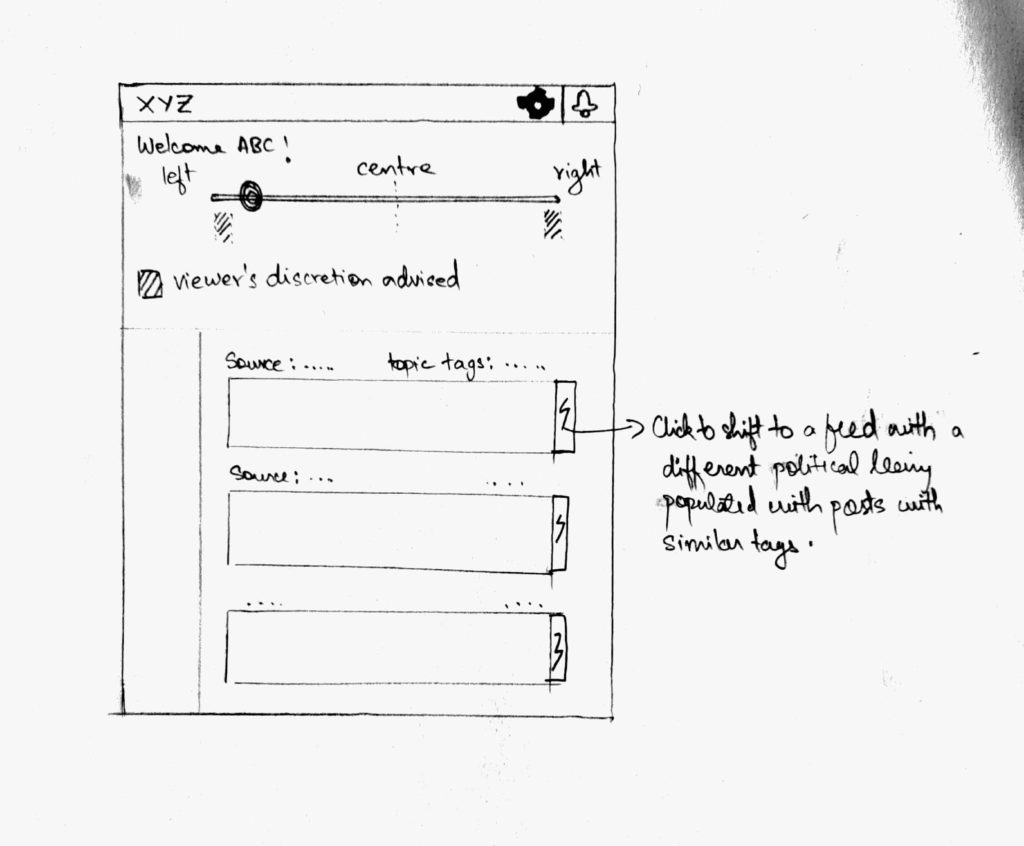

The paper states that belief in conspiracies can be exacerbated when someone thinks they have a varied information diet but actually has a very one-sided one. This reminds me of the Overton Window, the name given to the set of ideas that can be publicly discussed at any given time. Vox had a very interesting video on how the Overton Window, after decades of shifting leftward, is now beginning to shift to the right. This also affects our information diet: what we feel is politically neutral might actually be politically biased, because the public discourse itself is biased.