How we humans select information depends on a few known factors that bias the selection process. This is a well-established phenomenon. Given that such cognitive biases exist, and that we live in a democracy during an age of information overload, how do these biases affect social conversation and debate?

As mentioned in the talk, this selective exposure or judgment can be used for good, for example to increase voter turnout. But this gets me thinking: is such nudging sustainable? Relating this to the discussion that followed Reading Reflection #1 about different kinds of signals, is this nudge an assessment signal or a conventional signal? One could easily imagine users who are exposed to a barrage of news that bolsters their positions becoming desensitized and eventually neglecting these cues.

The portion of the talk where the speaker discusses how people behave when exposed to counter-attitudinal positions is an interesting one. Combined with one of the project ideas I proposed, it got me thinking about a particular news feed design.

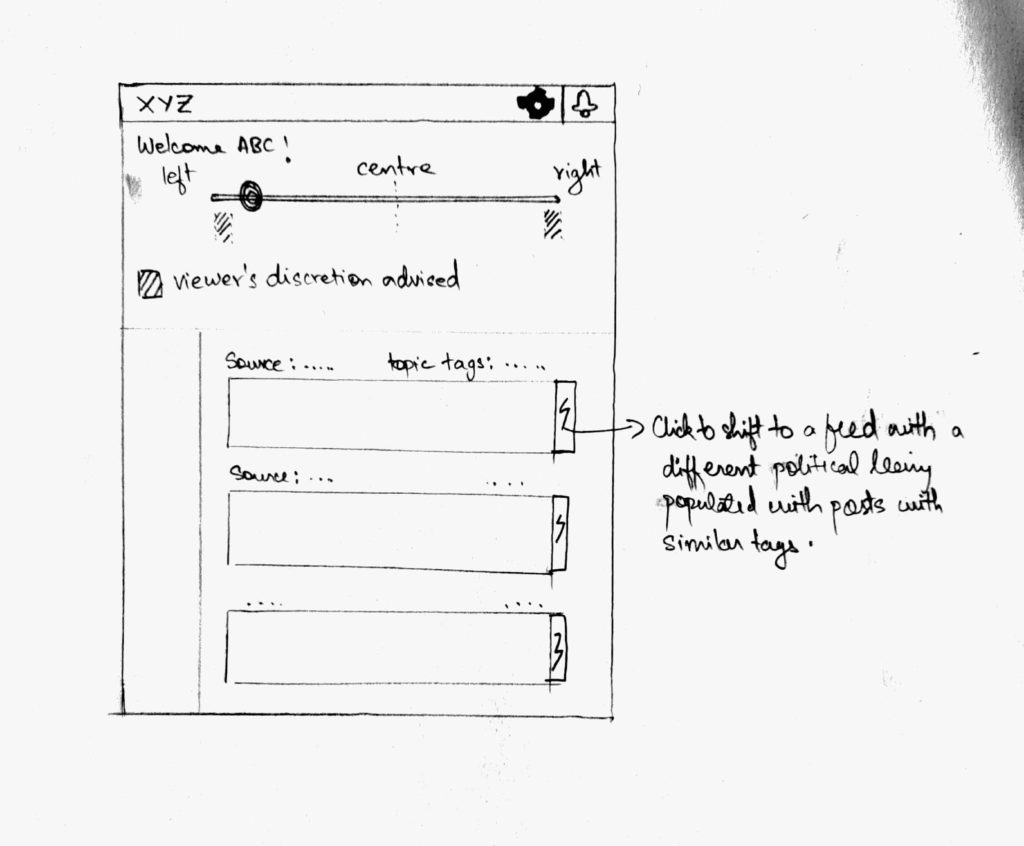

Assuming we solve the problem of mapping different news sources onto the political spectrum, we could expose users to sources from outside their own part of the spectrum. One way is a slider whose position decides which news sources populate the news feed, as shown above. The lightning symbol next to each article lets the user switch to a feed of articles about the same topic. Topic tags found through keyword extraction (Rose et al. (2010)), combined with each article's publication time, could help us suggest articles on the same issue from sources with a different political leaning.
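The two mechanisms in this design (the slider and the lightning-symbol switch) can be sketched roughly as follows. This is a minimal illustration under loudly stated assumptions, not a working system. Every source is assumed to already carry a hypothetical `leaning` score between -1.0 (left) and 1.0 (right), which is exactly the unsolved mapping problem mentioned above. Each article's topic tags are assumed to be already extracted (for example with RAKE). "Same issue" is approximated here by Jaccard overlap of the tag sets plus a maximum gap in publication time. The names `feed_for_slider`, `same_topic_other_side`, and the thresholds `width`, `min_overlap`, `max_gap`, and `min_distance` are all made up for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Article:
    title: str
    source: str
    leaning: float         # hypothetical source score: -1.0 (left) to 1.0 (right)
    keywords: frozenset    # topic tags, assumed already extracted (e.g. with RAKE)
    published: datetime


def feed_for_slider(articles, slider, width=0.25):
    """Articles whose source leaning lies within `width` of the slider position."""
    return [a for a in articles if abs(a.leaning - slider) <= width]


def jaccard(a, b):
    """Keyword overlap: 0.0 for disjoint sets, 1.0 for identical sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def same_topic_other_side(target, articles, min_overlap=0.3,
                          max_gap=timedelta(hours=48), min_distance=0.5):
    """Articles on the same issue as `target` (shared topic tags, published
    close in time) from sources at least `min_distance` away on the spectrum."""
    matches = []
    for a in articles:
        if a is target or abs(a.leaning - target.leaning) < min_distance:
            continue
        if abs(a.published - target.published) > max_gap:
            continue
        score = jaccard(target.keywords, a.keywords)
        if score >= min_overlap:
            matches.append((score, a))
    matches.sort(key=lambda pair: pair[0], reverse=True)  # most similar first
    return [a for _, a in matches]


# Toy, made-up articles purely to exercise the functions.
t0 = datetime(2019, 1, 10, 9, 0)
articles = [
    Article("Shutdown enters day 20", "Source A", -0.6,
            frozenset({"government shutdown", "border wall", "congress"}), t0),
    Article("Wall funding standoff continues", "Source B", 0.7,
            frozenset({"government shutdown", "border wall", "president"}),
            t0 + timedelta(hours=5)),
    Article("Local team wins", "Source C", 0.7,
            frozenset({"football", "playoffs"}), t0),
]

right_feed = feed_for_slider(articles, slider=0.7)
related = same_topic_other_side(articles[0], articles)
print([a.title for a in right_feed])  # ['Wall funding standoff continues', 'Local team wins']
print([a.title for a in related])     # ['Wall funding standoff continues']
```

Tag overlap combined with a time window is a cheap stand-in for "same issue." A real feed would likely need better topic matching, and the leaning scores remain the hard, unsolved part.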

With such a design in place, we could identify trends in how and when users enter the sphere of counter-attitudinal positions, an idea the speaker mentions in the video.

Do people linger longer on comments that go against their beliefs, or on those that agree with them? One could run experiments with consenting users to see which comments they spend more time reading, then pick and analyze the posts at the top of that list while accounting for post length. My hypothesis is that comments that go against one's beliefs warrant more time. The reader must first comprehend the position, then compare and contrast it with their own belief system, and then act by replying or reacting to the comment. If this temporal information proves useful, it could pave the way for a method of finding “top comments”, uncivil comments (more time taken), and explicit content (less time taken). When extracting top comments, one would need to keep a human in the loop and account for the reader's own position on the political spectrum.
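Here is a rough sketch of the length-normalized reading-time measure described above, assuming it is computed as seconds of dwell time per word. The log format is a hypothetical one of `(user_id, comment_id, seconds)` records. So is the per-reader `stance` label ("agree"/"disagree") needed to test the hypothesis. The numbers below are toy inputs invented to show usage, not experimental data.

```python
from collections import defaultdict
from statistics import mean


def normalized_dwell(log, word_counts):
    """Map each comment to its mean reading time per word across users.

    log: iterable of (user_id, comment_id, seconds) dwell records (hypothetical format)
    word_counts: dict comment_id -> number of words in the comment
    """
    per_comment = defaultdict(list)
    for _user, comment_id, seconds in log:
        per_comment[comment_id].append(seconds / word_counts[comment_id])
    return {cid: mean(vals) for cid, vals in per_comment.items()}


def rank_comments(log, word_counts):
    """Comment ids sorted by length-normalized dwell time, longest first."""
    scores = normalized_dwell(log, word_counts)
    return sorted(scores, key=scores.get, reverse=True)


def dwell_by_stance(log, word_counts, stance):
    """Mean seconds per word, split by whether the comment agrees with the reader.

    stance: dict (user_id, comment_id) -> "agree" or "disagree" (hypothetical labels)
    """
    groups = defaultdict(list)
    for user, comment_id, seconds in log:
        groups[stance[(user, comment_id)]].append(seconds / word_counts[comment_id])
    return {label: mean(vals) for label, vals in groups.items()}


# Toy inputs, invented only to show how the functions are called.
word_counts = {"c1": 50, "c2": 100, "c3": 20}
log = [("u1", "c1", 30), ("u2", "c1", 20), ("u1", "c2", 40), ("u2", "c3", 4)]
stance = {("u1", "c1"): "disagree", ("u2", "c1"): "agree",
          ("u1", "c2"): "agree", ("u2", "c3"): "agree"}

print(rank_comments(log, word_counts))  # ['c1', 'c2', 'c3']
by_stance = dwell_by_stance(log, word_counts, stance)
print({k: round(v, 2) for k, v in by_stance.items()})  # {'disagree': 0.6, 'agree': 0.33}
```

Dividing by word count is only one simple way to account for post length. Reading speed is not linear in length, so a real study would probably need a more careful baseline per reader.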

The speaker's discussion of “priming” users through the stereotype content model (Fiske et al. (2018)) is extremely fascinating. Given that priming significantly affects how users react to certain information, is it possible to identify “priming” in news articles or news videos?

One could build an automated tool to detect priming and identify its kind, whether “like”, “respect”, or a prime along some other orthogonal dimension. One such orthogonal prime could be “recommend”, which the speaker points out in her research (Stroud et al. (2017)). If such a tool existed, it would be interesting to run it on a large number of sources to identify these nudges.

References

Susan T Fiske, Amy JC Cuddy, Peter Glick, and Jun Xu. 2018. A model of (often mixed) stereotype content: Competence and warmth respectively follow from perceived status and competition (2002). In Social Cognition. Routledge, 171–222.

Stuart Rose, Dave Engel, Nick Cramer, and Wendy Cowley. 2010. Automatic keyword extraction from individual documents. Text Mining: Applications and Theory (2010), 1–20.

Natalie Jomini Stroud, Ashley Muddiman, and Joshua M Scacco. 2017. Like, recommend, or respect? Altering political behavior in news comment sections. New Media & Society 19, 11 (2017), 1727–1743.