In social communication, there are multiple values that people tend to respect in order to gain different types of benefits. Being polite is one of the most important among these values. In modern online communities, politeness plays a great role for the community to ensure healthy interactions and for individuals to maximize their benefits, either being a request for help, conveying an opinion to an audience, or any other types of online social interactions.

In their work “A computational approach to politeness with application to social factors”, Danescu-Niculescu-Mizil et al. presented a valuable approach to computationally analyzing politeness in online communities based on linguistic traits. Their work consisted of labeling a large dataset of requests on Wikipedia and StackOverflow using human annotators, extracting linguistic features, and building a machine learning model that automatically classifies requests as polite or not polite with a close-to-human classification performance. They then use their model to analyze the correlation between politeness and social factors such as power and gender.

My reflection about their work consists of the following points:

- it is nontrivial to define a norm for politeness. One way of learning this norm is to use human annotators as Danescu-Niculescu-Mizil et al. did. It could be interesting to conduct a similar annotation for the same dataset using human annotators from different cultures ( e.g. different geographic locations ) to understand how the norm for politeness may differ. It could also be interesting to study people’s perception of politeness across different domains. For example, the norm of politeness may differ if the comments are from a political news website versus technical discussions in computer programming.

- The model evaluation shows a noticeable difference between the in-domain vs. cross-domain settings, as well as another noticeable difference between the cross-domain performance of the model trained with Wikipedia and the that trained with StackExchange. A simple reasoning could be that there are community specific vocabularies that make a model trained on data from one community not to generalize very well on other communities. From this point, we may conclude that the vocabulary used in comments on StackExchange is more generic than that used in the requests to edit on Wikipedia, which gives an advantage to the cross-domain model trained with StackExchange. I believe it is highly important to categorize the communities and to analyze the community-specific linguistic traits in order to make an informed decision when training a cross-domain model.

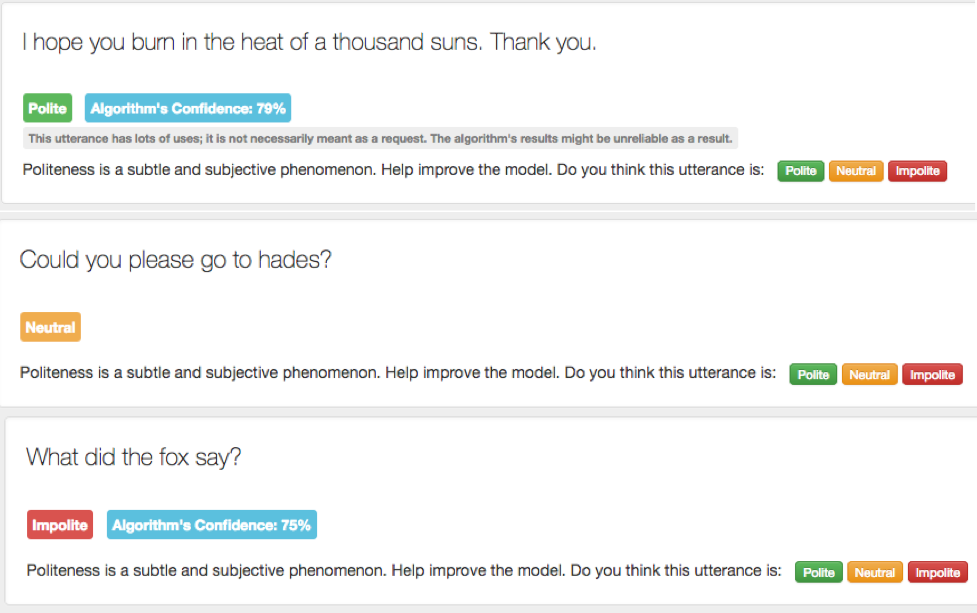

- Such a study could be used to help to moderate social platforms that are keen to maintain a certain level of “politeness” in their interactions. It could help moderators automatically detect impolite comments, as well as individuals to tell them how likely are their comments to be perceived as polite or not before sharing them.

- Given the negative correlation between social power and politeness as inferred by the study, could it be useful to rethink the design of online social systems to encourage maintaining politeness in individuals with higher social power?

- Although the study incurs some limitations such as the performance of cross-domain models, it represents a robust and coherent analysis that could serve as a guideline for many similar data-driven studies.

To conclude, there are multiple benefits in studying traits of politeness and automatically predict it in online social platforms. This study inspires me to start from where they stopped and work on enhancing their models and applying them to multiple useful domains.