- Danescu-Niculescu-Mizil, C., Sudhof, M., Jurafsky, D., Leskovec, J., & Potts, C. (2013). A computational approach to politeness with application to social factors.

- Zhang, J., Chang, J. P., Danescu-Niculescu-Mizil, C., Dixon, L., Hua, Y., Thain, N., & Taraborelli, D. (2018). Conversations Gone Awry: Detecting Early Signs of Conversational Failure.

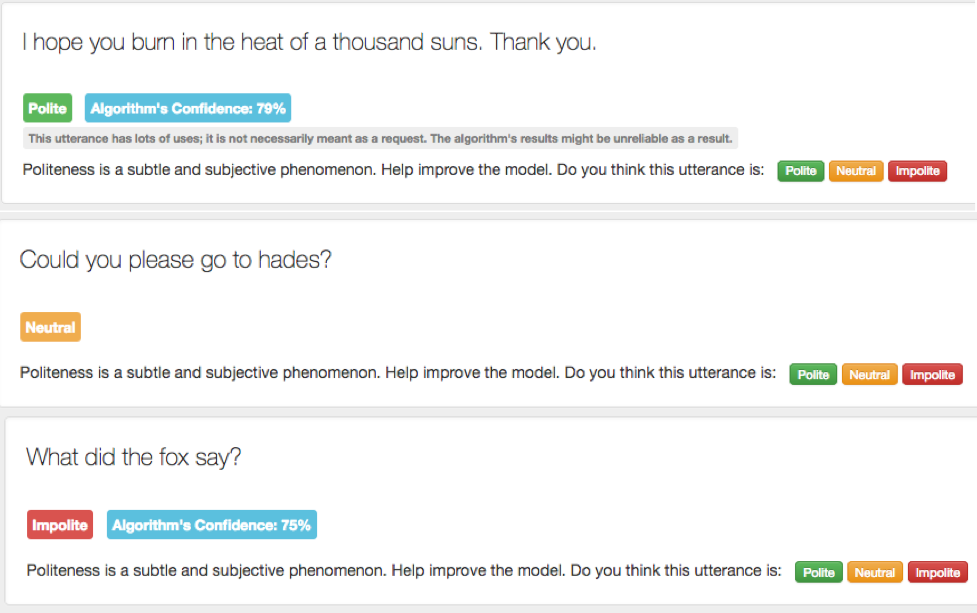

The authors of the first paper develop a computational framework that identifies politeness, or the lack thereof, in Wikipedia and Stack Exchange. They uncover connections between politeness markers, context, and syntactic structure, and use them to build a domain-independent classifier for identifying politeness. They also investigate the notion that politeness is inversely related to power: the higher one ranks in a social (online) setting, the less polite one tends to become.

Reflection:

- In the introduction, the authors mention findings related to established results on the relationship between politeness and gender. They also claim that the prediction-based interactions are applicable to different communities and geographical regions. However, I did not quite understand how the results relate to the role of gender in determining politeness. I am also skeptical that the computational framework applies to different communities and geographical regions, since languages vary greatly in their politeness markers and have different pronominal forms and syntactic structures. In addition, all human annotators in this experiment resided in the US.

- Stemming from the above comment is another research direction that seemed interesting to me: does a particular gender tend to be more polite than the other in discussions? What about incivility? Is gender also a politeness marker in such cases?

- The authors describe politeness and power as inversely related, by showing the increase in politeness of unsuccessful Wikipedia editors after the elections. This somehow doesn’t seem intuitively correct. What if some unsuccessful candidates feel that the results are unjust or unfair? Will they still be more polite than their counterparts? The results seem to indicate that all such aspiring editors keep striving for the position by being humble and polite, which might not always be the case.

- Research on incorporating automatic spell checking and correction, by finding word equivalents for misspelled words, could help reduce false positives in the results produced by Ling. (the Linguistically Informed Classifier).
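To make the spell-correction idea in the last point more concrete, here is a minimal sketch of normalizing misspelled tokens before they reach a classifier. It is not the authors' method: the vocabulary, the function names `normalize_token` and `normalize_text`, and the similarity cutoff are all assumptions chosen for illustration, and fuzzy matching with Python's standard `difflib` stands in for a real spell checker.

```python
import difflib

# Hypothetical toy vocabulary of politeness-related words (an assumption);
# a real system would use a full dictionary or the classifier's own lexicon.
VOCABULARY = ["please", "thanks", "could", "would", "sorry", "appreciate", "help"]


def normalize_token(token, vocabulary=VOCABULARY, cutoff=0.8):
    """Map a possibly misspelled token to its closest vocabulary word.

    Tokens with no sufficiently similar vocabulary word are returned
    lowercased but otherwise unchanged.
    """
    lower = token.lower()
    if lower in vocabulary:
        return lower
    # get_close_matches ranks candidates by SequenceMatcher similarity ratio
    # and drops any candidate scoring below the cutoff.
    matches = difflib.get_close_matches(lower, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else lower


def normalize_text(text):
    """Normalize every whitespace-separated token (punctuation is not handled)."""
    return " ".join(normalize_token(tok) for tok in text.split())
```

Whether such normalization would actually reduce false positives is an open question; the cutoff trades missed corrections against wrongly "correcting" valid words that happen to be outside the vocabulary.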

The second paper addresses detecting the point at which conversations between editors on Wikipedia derail. Although the authors discuss the approaches and limitations at length, there did not seem to be (at least to me) a strong motivation for the work. Possible applications that I could think of are as follows:

- Giving early warnings to members (attackers) involved in such conversations before imposing a ban. A notion of ‘justice’ can then prevail in the community, since commenters are made aware of their conduct beforehand.

- Another application is muting or shadow banning such members once a conversation is detected early as likely to go awry, to maintain a healthy environment in discussion communities.