Natalie Jomini Stroud. “Partisanship and the Search for Engaging News”

We can take two lessons from Stroud’s work and approach:

- Using a sociological lens to study how people make decisions, how those decisions are reinforced, and therefore how they can be changed.

- The effect of tone, opposing viewpoints, engagement by journalists, and interventions by moderators (a carrot-and-stick approach) on the quality of online discourse.

We can use these lessons to consider a “news diet” and, in conjunction with the previous reading on Resnick’s approach to showing news bent, propose a design featuring a nutritional label.

The design considerations should be in line with the hypotheses and concerns laid out in Stroud’s talk, that is:

- The design should not play to people’s predilections. If you confirm that I am a conservative, I will wear that label proudly, regardless of whether that is a good thing or not.

- The design should not try to change a person’s opinion:

a) It is dangerous and may backfire

b) The First Amendment prescribes that everyone has a right to an opinion. Civility != agreement with an opinion

c) The entire moderation structure is subjective

- The design should nudge users towards a willingness to “listen” to the other team

- The design should nudge the opposing side to contribute in a “healthy”, constructive way

- Points 3 and 4 (willingness to listen and constructive contribution) form a necessary and mutually supportive loop.

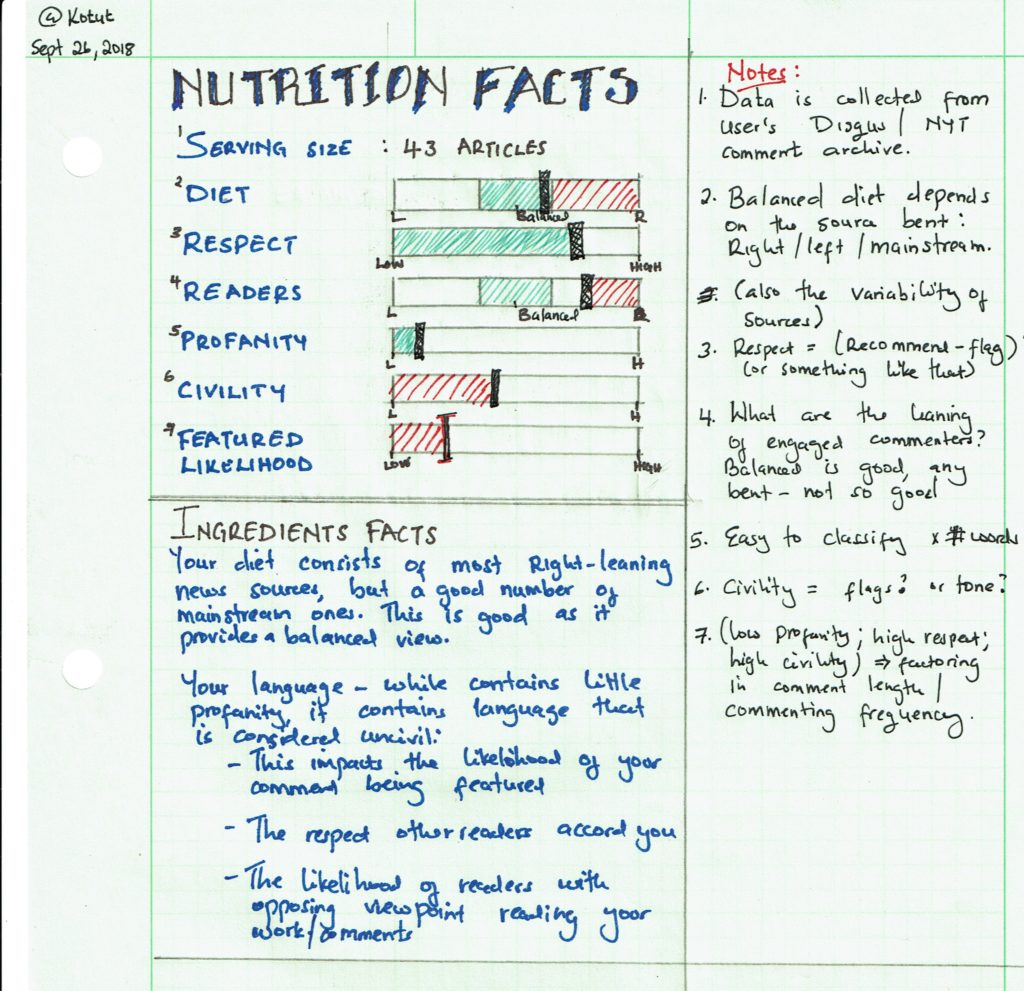

We can encapsulate these ideas in a nutritional label, a mechanism whose function a user understands at a glance. This property has been used in previous work to classify online documents, articulate rankings, and reveal privacy considerations.

Because we do not need to explain how the label works, we can concentrate on providing pertinent information that the user can take in at a glance. The label can also feature buttons (as recommended by Stroud) to nudge users towards a certain behavior.

The design is included below:

PS: A transcription of the written notes follows.

Ingredient Facts

- Your diet consists mostly of right-leaning news sources, but also a number of mainstream ones. This is good, as it gives you a balanced view of the news.

- Your language: While it contains little profanity, it contains language that is considered uncivil. This impacts:

  - The likelihood of your comments being featured

  - The respect other readers accord you

  - The likelihood of readers with opposing viewpoints reading your comments

Notes

- Data is collected from the user’s comment archive, e.g. Disqus or NYT

- “Balanced diet” depends on the bent of each news source (right/left/mainstream), together with the variety of sources

- “Respect” is a function of “flagged” and “recommended” comments

- Commenter’s audience: How do they lean?

- Civility and Profanity are based on textual features

- “Featured likelihood” can be considered a reward, something to cement the user’s respect, i.e. the carrot.
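
To make the notes above more concrete, here is a minimal Python sketch of how the “balanced diet” and “respect” figures on the label might be computed. Everything in it is an assumption for illustration rather than part of the design: the `SOURCE_LEAN` mapping and its example domains are hypothetical, variety is approximated by normalized Shannon entropy over the three lean categories, and respect is taken as the share of reader reactions that are recommendations rather than flags. Civility and profanity scoring are left out because they would need a real text classifier.

```python
from collections import Counter
from math import log

# Hypothetical mapping from news source to lean; a real system would
# need a vetted, externally maintained list.
SOURCE_LEAN = {
    "example-right.com": "right",
    "example-left.com": "left",
    "example-wire.com": "mainstream",
}


def diet_summary(sources_read):
    """Return (share per lean, variety score in 0..1) for a reading history."""
    leans = [SOURCE_LEAN[s] for s in sources_read if s in SOURCE_LEAN]
    if not leans:
        return {}, 0.0
    counts = Counter(leans)
    total = len(leans)
    shares = {lean: n / total for lean, n in counts.items()}
    # Assumed variety measure: Shannon entropy over the lean categories,
    # scaled so that 1.0 means an even right/left/mainstream mix.
    entropy = -sum(p * log(p) for p in shares.values())
    variety = max(0.0, entropy / log(3))
    return shares, variety


def respect_score(recommended, flagged):
    """Assumed formula: fraction of reader reactions that were recommendations."""
    reactions = recommended + flagged
    return recommended / reactions if reactions else 0.0


if __name__ == "__main__":
    history = ["example-right.com", "example-right.com", "example-wire.com"]
    shares, variety = diet_summary(history)
    print("Lean shares:", shares)
    print("Variety:", round(variety, 2))
    print("Respect:", respect_score(recommended=8, flagged=2))
```

Sources missing from the mapping are skipped rather than guessed at, so the label only reports on leans it can actually attribute.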