Eye gaze patterns vary with reading purpose and text complexity, and can provide insight into how a reader perceives the content. We hypothesize that during a complex sensemaking task involving many text-based documents, eye-tracking data can be used to predict the importance of documents and words, and that these predictions could form the basis for intelligent suggestions from the system to an analyst. We introduce a novel eye-gaze metric called ‘GazeScore’ that predicts an analyst’s perception of the relevance of each document and word during a sensemaking task. We conducted a user study to assess the effectiveness of this metric, and we explore potential real-time applications that support immersive sensemaking by offering relevant suggestions.

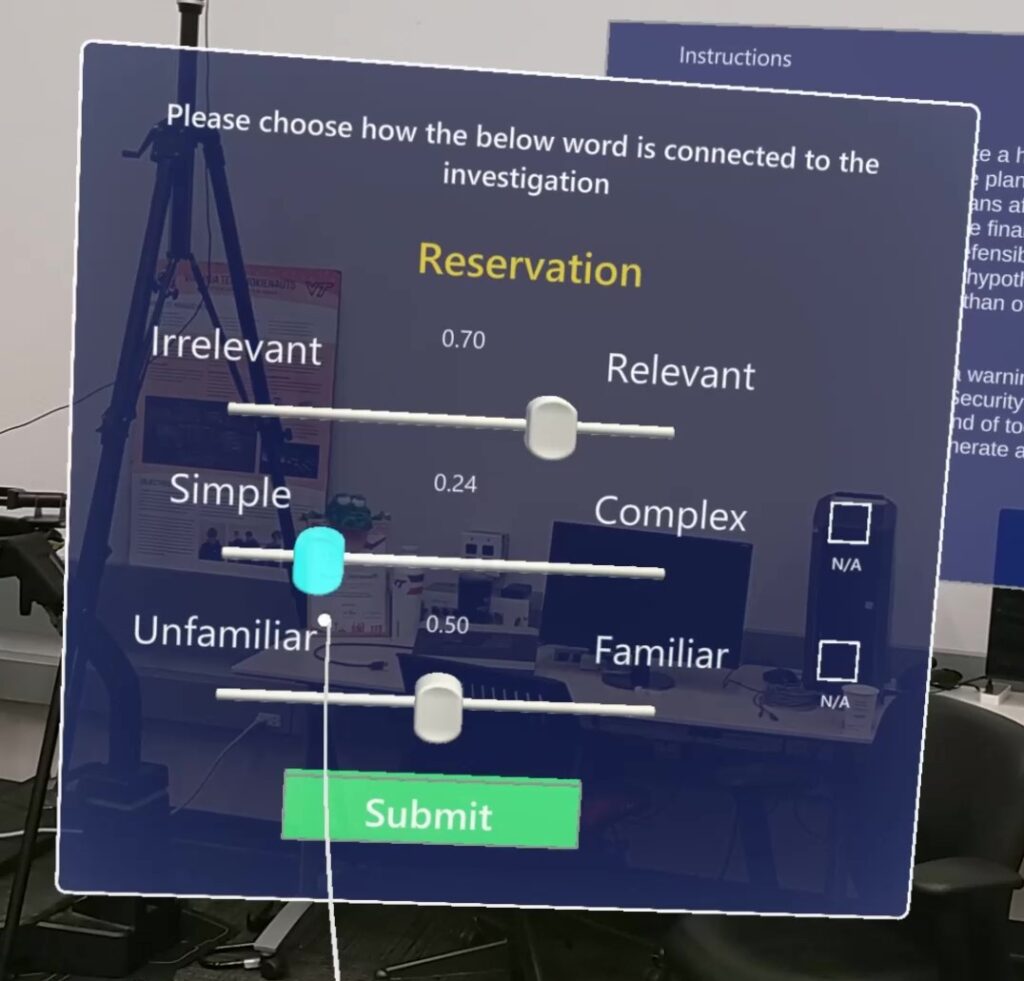

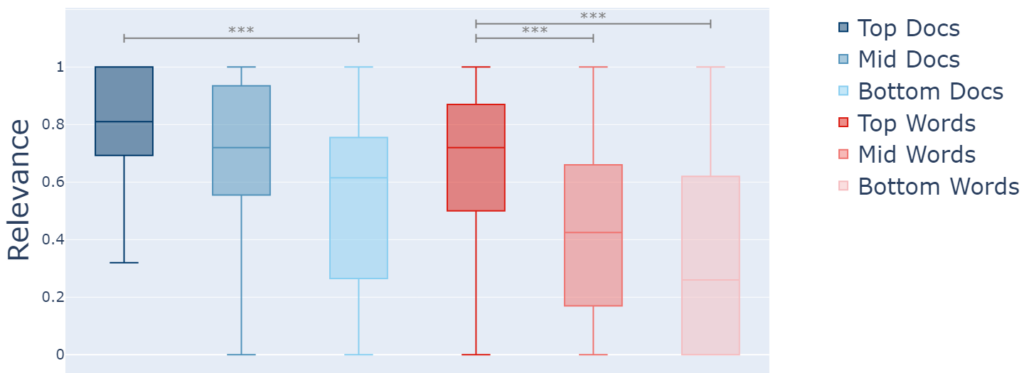

The system retrieves a set of words and documents at different levels of predicted relevance, as determined by GazeScore. Users then review each item and rate it based on their perceived relevance.
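To make the retrieval step concrete, here is a minimal sketch of how items could be drawn from different levels of a score ranking. This page does not describe how GazeScore is computed or how the study chose its items, so everything here is an assumption for illustration: the function name `pick_by_relevance_level`, the three levels, the parameter `k`, and the example scores are all hypothetical.

```python
from typing import Dict, List


def pick_by_relevance_level(scores: Dict[str, float], k: int = 2) -> Dict[str, List[str]]:
    """Return k items each from the top, middle, and bottom of a score ranking.

    `scores` maps an item (a document or a word) to a hypothetical
    GazeScore-like value, where higher means more predicted relevance.
    """
    if k <= 0 or 3 * k > len(scores):
        raise ValueError("need k > 0 and at least 3 * k scored items")
    # Rank from highest to lowest score; ties are broken by name for determinism.
    ranked = sorted(scores, key=lambda item: (-scores[item], item))
    # Start of a k-item window centred in the ranking.
    mid_start = (len(ranked) - k) // 2
    return {
        "high": ranked[:k],
        "medium": ranked[mid_start:mid_start + k],
        "low": ranked[-k:],
    }


# Illustrative scores only; these are not values from the study.
example_scores = {
    "doc_a": 0.91, "doc_b": 0.75, "doc_c": 0.52,
    "doc_d": 0.40, "doc_e": 0.18, "doc_f": 0.05,
}
levels = pick_by_relevance_level(example_scores, k=1)
print(levels)
```

Requiring at least `3 * k` items keeps the three groups from overlapping, so each item a user rates belongs to exactly one predicted-relevance level.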

We found strong evidence that documents and words with high GazeScores were perceived as more relevant, while those with low GazeScores were perceived as less relevant.

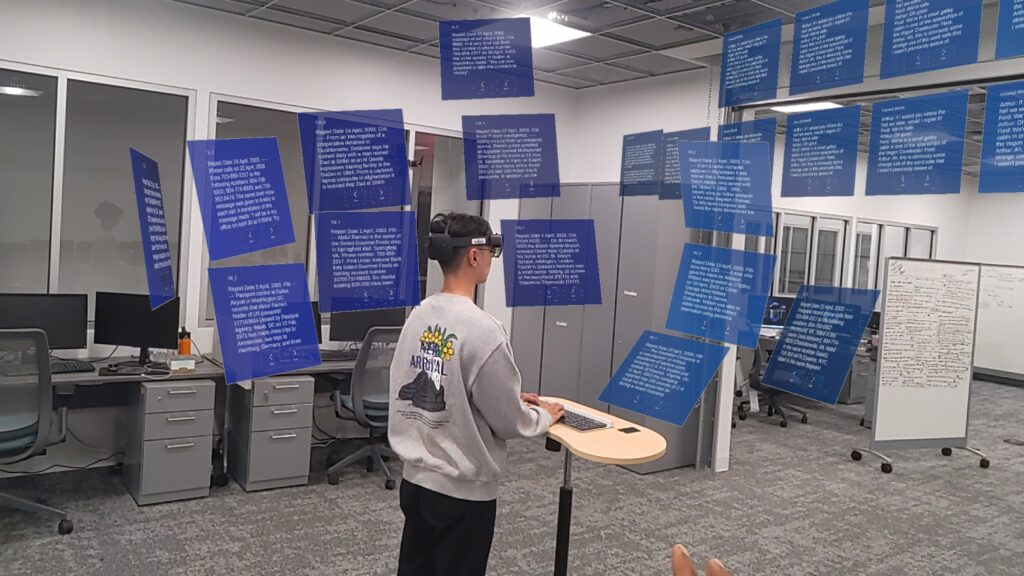

Here’s an overview of our system and the flow of the user study.

Our findings from this study are scheduled to be presented at the 22nd IEEE International Symposium on Mixed and Augmented Reality (ISMAR).

Proceedings Articles

Evaluating the Feasibility of Predicting Information Relevance During Sensemaking with Eye Gaze Data. In: 2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 713–722, IEEE, 2023.