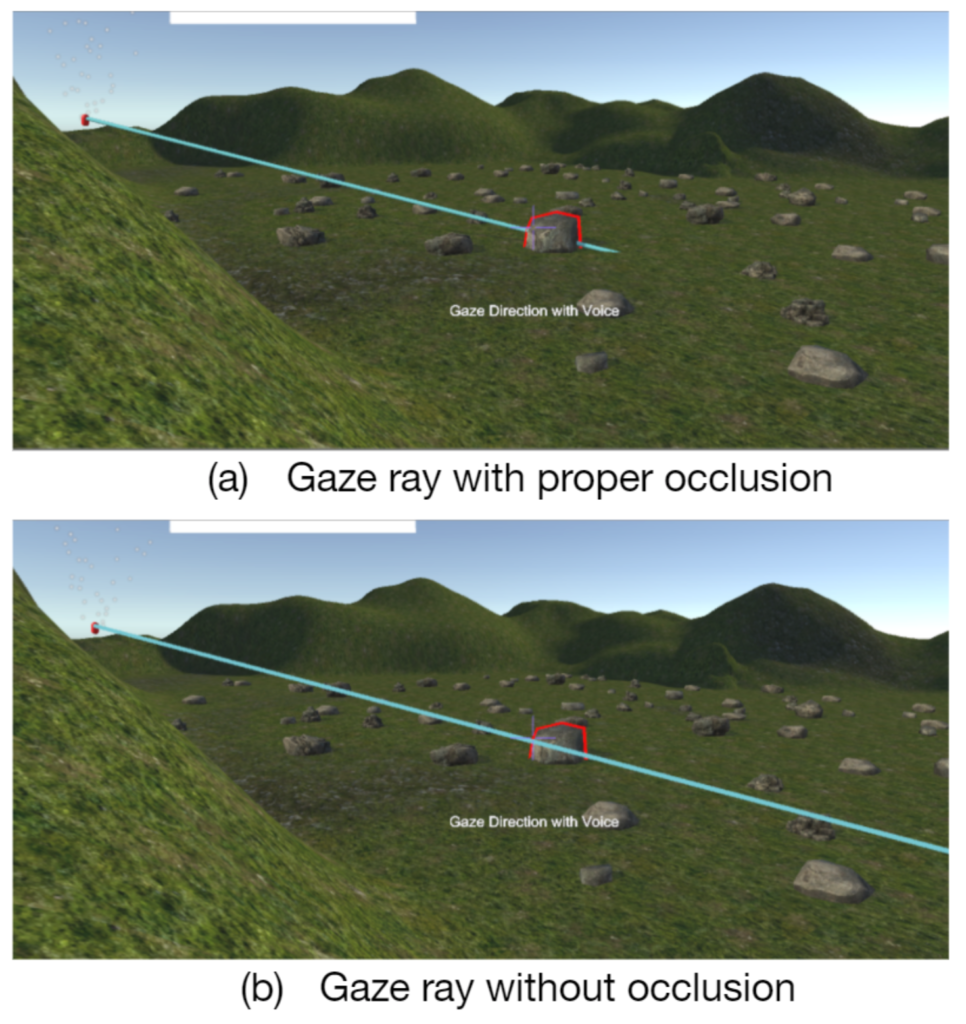

Joint attention on an object of mutual interest is a common requirement in many collaborative tasks. Because we live in a three-dimensional world, such joint attention usually involves communicating and confirming an object’s location. This collaborator-awareness problem of communicating an object’s spatial location is relatively easy when the two collaborators are close to each other: one only needs to point at the target and confirm the pointing verbally. At large distances, collaborators can use laser pointers to enhance the pointing effect. In this case, the observing collaborator performs a visual search task to find the point where the laser beam intersects the target object.

However, applying the same gaze-ray technique in Augmented Reality (AR) is not as easy. To display a 3D virtual ray correctly, the AR system needs a model of the environment so that the ray can properly occlude, be occluded by, and intersect with the real world. Environment information, which is often stored as geometry models, is not always reliable and, in the worst case, does not exist at all. When no environment model is available, the AR system can only render a virtual ray that does not interact with any real-world object and appears on top of everything along its path. In such model-free AR, the virtual ray cannot be shown to intersect the target object correctly.

To address this issue, we use two enhancements that help a wide-area AR user reach consensus with a collocated but distant collaborator on the object of interest and/or understand the pointing direction.

Visual Enhancement

Asking the remote partner to cast more than one virtual ray at the object of interest, each from a different position, effectively reduces the visual ambiguity caused by missing occlusion. It also eases the visual search task: reaching visual consensus becomes a search for the object that intersects all of the pointing rays.
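Why multiple rays help can be illustrated with a small geometric sketch. It is not the system’s implementation (in the technique above, the user performs the search visually); it only shows the filtering logic. We assume each ray is given as a hypothetical `(origin, direction)` pair in world coordinates and that each candidate object is reduced to a single point. An object counts as “interacting” with a ray if its distance to the ray is within a tolerance, and only objects consistent with every ray survive. The names `distance_to_ray`, `consistent_candidates`, the scene, and the tolerance value are all illustrative.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Ray = Tuple[Vec3, Vec3]  # hypothetical (origin, direction); direction need not be unit length


def distance_to_ray(point: Vec3, origin: Vec3, direction: Vec3) -> float:
    """Shortest distance from `point` to the ray origin + t * direction, t >= 0."""
    v = tuple(p - o for p, o in zip(point, origin))
    d_len2 = sum(c * c for c in direction)
    if d_len2 == 0.0:
        raise ValueError("ray direction must be non-zero")
    # Project onto the ray and clamp, so points behind the origin measure to the origin.
    t = max(0.0, sum(a * b for a, b in zip(v, direction)) / d_len2)
    closest = tuple(o + t * d for o, d in zip(origin, direction))
    return math.dist(point, closest)


def consistent_candidates(
    candidates: Dict[str, Vec3], rays: Sequence[Ray], tolerance: float
) -> List[str]:
    """Names of candidates lying within `tolerance` of every pointing ray."""
    return [
        name
        for name, pos in candidates.items()
        if all(distance_to_ray(pos, o, d) <= tolerance for o, d in rays)
    ]


if __name__ == "__main__":
    # Hypothetical scene: three objects that all lie along the first ray.
    candidates = {"tree": (5.0, 5.0, 0.0), "lamp": (10.0, 10.0, 0.0), "bench": (2.0, 2.0, 0.0)}
    ray_a: Ray = ((0.0, 0.0, 0.0), (1.0, 1.0, 0.0))    # first pointing ray
    ray_b: Ray = ((10.0, 0.0, 0.0), (-1.0, 1.0, 0.0))  # second ray, cast from another position
    print(consistent_candidates(candidates, [ray_a], 0.5))         # ['tree', 'lamp', 'bench']
    print(consistent_candidates(candidates, [ray_a, ray_b], 0.5))  # ['tree']
```

With only the first ray, every object along it passes the filter, which mirrors the ambiguity of a single ray drawn on top of the scene; the second ray, cast from a different position, leaves only the object at the intersection.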

Orientational Enhancement

This enhancement helps the user understand the pointing direction more easily through a set of augmented bars. All bars are parallel to the pointing ray cast by the collaborator. From the user’s view, the first bar is placed at chest height, and the others are equally spaced along a direction perpendicular to the virtual ray. This helps the user better understand the orientation of the pointing ray and therefore reason about which object is the intended target.


Conferences

Gaze direction visualization techniques for collaborative wide-area model-free augmented reality

Symposium on Spatial User Interaction, 2019.