Much research in computer graphics aims to create physically based motion that is visually plausible: motion that looks correct given our experience with the physical world and our understanding of physical laws. Such work seeks to present convincing behaviors of individual entities (e.g., a trajectory affected by gravity) and believable interactions between entities (e.g., collision and deformation). Displaying plausible motion in virtual reality (VR), however, is even more challenging. Unlike animation or traditional video games, VR places the user’s body directly into the virtual environment (VE) as another entity in the physical system. To maintain overall physical integrity, interactions between the human body and other virtual entities should also conform to consistent physical laws. Achieving such conformity requires not only correct visual information about motion, but also corresponding somatosensory stimulation, both on and inside the human body. This project proposes the concept of physics coherence to describe the degree to which VR object manipulation complies with physical laws.

We present the design of two techniques to achieve high levels of physics coherence:

- The first is the physically coherent virtual hand. With this technique, a zero-order (position-control) mapping between the hand’s movement and the object’s movement is simulated by the physics engine, so the control mechanism resembles a naïve simple virtual hand while physics-based effects are enabled, including gravity, collision, and pseudo-haptic feedback of weight variation produced by adjusting the control-display (C/D) ratio.

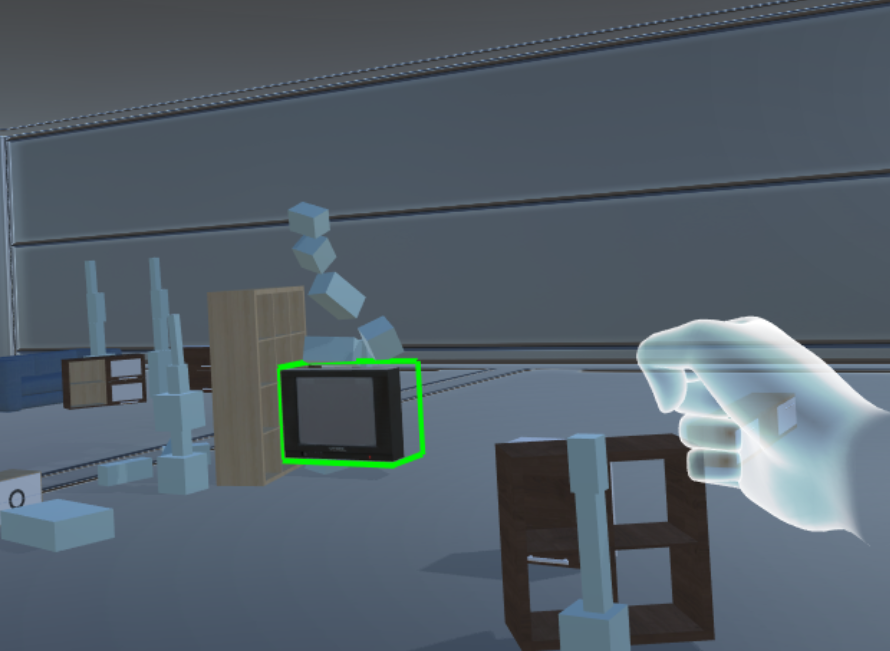
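To make the zero-order mapping more concrete, here is a minimal one-dimensional sketch; it is not the project's implementation. It assumes the grabbed object is pulled toward the hand's target position by a critically damped spring integrated with semi-implicit Euler, so gravity keeps acting on it like on any other rigid body. It also uses a hypothetical `cd_gain` rule, in which heavier objects move less per unit of hand motion, as a stand-in for the C/D-ratio adjustment. `Body`, `cd_gain`, `step`, and all constants are illustrative names and values.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2 along the vertical axis


@dataclass
class Body:
    mass: float        # kg
    pos: float = 0.0   # m, height of the object
    vel: float = 0.0   # m/s


def cd_gain(mass: float, reference_mass: float = 1.0, min_gain: float = 0.25) -> float:
    """Hypothetical display gain: objects heavier than reference_mass move
    less per unit of hand motion (lower gain, i.e. a higher C/D ratio)."""
    return max(min_gain, min(1.0, reference_mass / mass))


def step(body: Body, hand_offset: float, anchor: float,
         dt: float = 0.01, stiffness: float = 400.0) -> None:
    """Advance the held object by one physics step.

    hand_offset: hand displacement since the grab (m).
    anchor: object position at the moment of the grab (m).
    """
    # Zero-order (position) mapping, scaled by the hypothetical C/D gain.
    target = anchor + cd_gain(body.mass) * hand_offset
    # Critically damped spring-damper pulls the object toward the target.
    damping = 2.0 * (stiffness * body.mass) ** 0.5
    force = stiffness * (target - body.pos) - damping * body.vel
    # Gravity still acts on the held object, so heavier objects sag more.
    force += body.mass * GRAVITY
    # Semi-implicit Euler: update velocity first, then position.
    body.vel += force / body.mass * dt
    body.pos += body.vel * dt


# Usage: both objects are grabbed at height 0 and the hand is raised by 0.4 m.
light, heavy = Body(mass=1.0), Body(mass=4.0)
for _ in range(500):  # 5 s of simulated time
    step(light, hand_offset=0.4, anchor=0.0)
    step(heavy, hand_offset=0.4, anchor=0.0)
print(f"light: {light.pos:.3f} m, heavy: {heavy.pos:.3f} m")
```

At rest, each object in this sketch settles at its target plus m·g/k, so the light object ends near 0.375 m and the heavy one near 0.002 m. Under these assumptions the heavier object both follows the hand less (lower gain) and sags more under gravity.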

- The second, called Force Push (T3 in the video), uses expressive hand gestures to apply force to the object. A novel algorithm dynamically maps rich features of hand gestures to properties of the physics simulation, so the technique forms a physics-based relationship between visual and kinesthetic cues: the amount of force (or torque) applied is determined by the amplitude and speed of the gestural input. To move a heavier object by the same distance, the user has to output more force kinesthetically by performing hand gestures with larger amplitude and higher speed (e.g., pushing harder to translate a heavier object). From a user-perception point of view, this can be seen as an attempt to realize pseudo-haptic feedback under this specific control metaphor.
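The principle that a heavier object demands a bigger, faster push can be illustrated with a toy model. The sketch below is not the Force Push algorithm from the paper. It assumes a hypothetical `gesture_force` mapping in which force is proportional to the product of gesture amplitude and speed, applies that force for a fixed push duration, and resists motion with a single Coulomb friction coefficient. It covers translation only, not torque; all names and constants are illustrative.

```python
from dataclasses import dataclass

G = 9.81  # m/s^2


@dataclass
class Gesture:
    amplitude: float  # m, hand travel during one push stroke
    speed: float      # m/s, mean hand speed during the stroke


def gesture_force(g: Gesture, gain: float = 20.0) -> float:
    """Hypothetical mapping: force grows with both amplitude and speed."""
    return gain * g.amplitude * g.speed


def push_distance(mass: float, g: Gesture,
                  push_time: float = 0.3, mu: float = 0.3) -> float:
    """Distance an object slides when the gesture force is applied for
    push_time seconds, with Coulomb friction mu * mass * G opposing motion."""
    net_acc = gesture_force(g) / mass - mu * G
    if net_acc <= 0.0:
        return 0.0  # the push cannot overcome friction
    v_end = net_acc * push_time
    while_pushing = 0.5 * net_acc * push_time ** 2
    coasting = v_end ** 2 / (2.0 * mu * G)  # friction decelerates at mu * G
    return while_pushing + coasting


gentle = Gesture(amplitude=0.2, speed=0.5)  # 2 N with the default gain
strong = Gesture(amplitude=0.4, speed=1.0)  # 8 N with the default gain

print(push_distance(0.5, gentle))  # light object slides
print(push_distance(2.0, gentle))  # heavy object does not move: 0.0
print(push_distance(2.0, strong))  # heavy object slides as far as the light one did
```

Because the outcome in this model depends only on force divided by mass, quadrupling the mass requires quadrupling the force, here by doubling both amplitude and speed. The friction threshold also means a gentle gesture cannot budge a heavy object at all.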

We compared these two techniques with a naïve simple virtual hand technique, measuring a variety of user experience factors. The experimental results provide empirical evidence that physics coherence has a positive influence on hedonic user experience. We summarize the lessons learned from this experiment as design guidelines that emphasize physics-driven motion, physically based mapping between kinesthetic cues and object motion, and relatable metaphors. We also propose the design of two techniques that illustrate these guidelines: the Tennis Ball controller and the Slingshot controller.

Journal Articles

Force Push: Exploring Expressive Gesture-to-Force Mappings for Remote Object Manipulation in Virtual Reality

In: Frontiers in ICT, vol. 5, pp. 25, 2018.