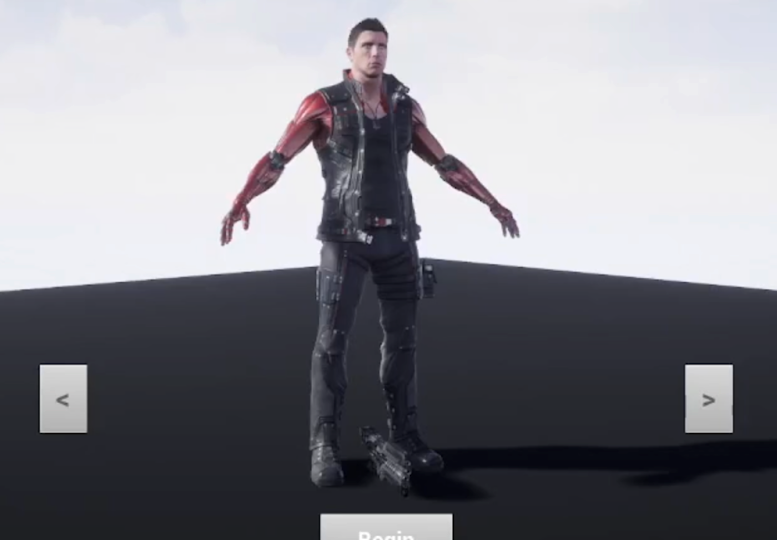

Live Action lets dancers perform as an avatar of their choosing in a virtual environment, with special effects that enhance their performance. Our goal is for the chosen avatar to feel personal, so that dancers feel well represented on screen when they watch themselves perform. Users first choose their dancing avatar from a selection of male and female avatars, and they can click the left and right arrow keys to cycle through different outfits. Users who have tried our tool so far have told us that they loved choosing flowy outfits that matched their own aura, because seeing the costumes flow with their steps makes the on-screen performance look smoother. If users are unsatisfied with the avatars currently available, they can describe their ideal dancing avatar and choose from a wide selection of avatars at https://www.mixamo.com, which we can then import into our character selection UI. This extends personalization beyond the avatars built into the tool.
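The left/right outfit switching amounts to stepping an index through a list that wraps around at both ends. The Python sketch below models only that logic; `OutfitSelector`, its methods, and the outfit names are hypothetical, and this is not the project's Unreal Engine implementation.

```python
from dataclasses import dataclass


@dataclass
class OutfitSelector:
    """Hypothetical model of the outfit carousel for one avatar."""

    outfits: list[str]
    index: int = 0

    def __post_init__(self) -> None:
        # An empty carousel has nothing to cycle through.
        if not self.outfits:
            raise ValueError("need at least one outfit")

    def current(self) -> str:
        return self.outfits[self.index]

    def next_outfit(self) -> str:
        # Right arrow: advance, wrapping from the last outfit back to the first.
        self.index = (self.index + 1) % len(self.outfits)
        return self.current()

    def previous_outfit(self) -> str:
        # Left arrow: step back, wrapping from the first outfit to the last.
        self.index = (self.index - 1) % len(self.outfits)
        return self.current()
```

For example, with `OutfitSelector(["flowy dress", "tracksuit", "jumpsuit"])`, pressing left from the first outfit wraps to `"jumpsuit"`. Using `%` in both directions keeps the wraparound in one place, because in Python a negative number modulo a positive divisor is non-negative.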

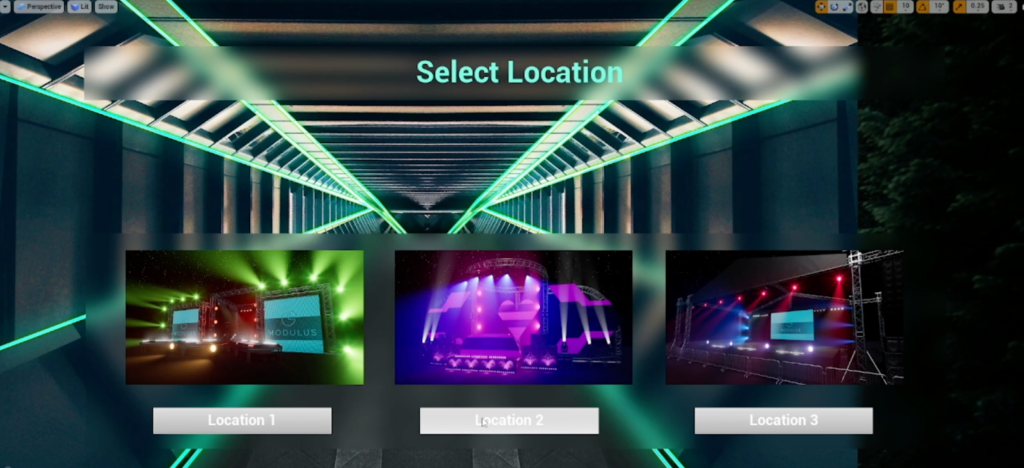

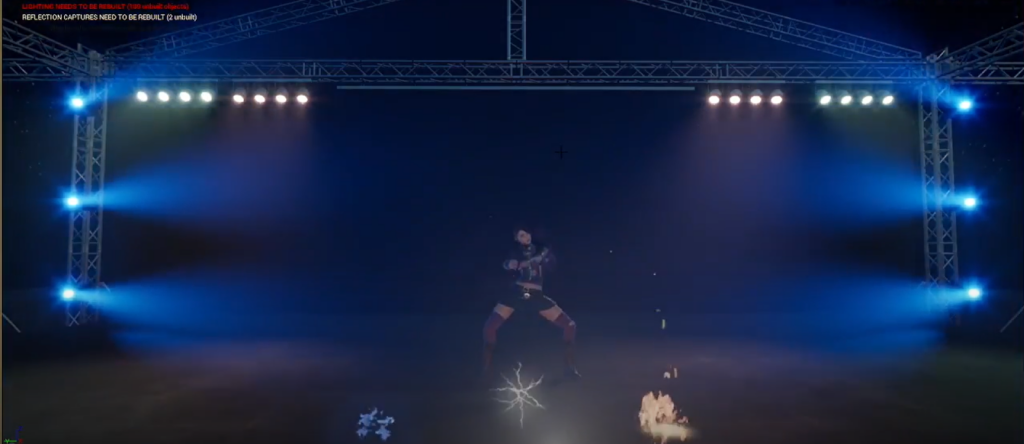

The UI guides users step by step through choosing their avatar and environment and then creating their performance. After character selection, dancers choose where they want to dance. There are currently three stage environments, and dancers can pick the one that best suits the dance style they are skilled in and want to perform. For example, our main dancer wanted an extravagant stage for her performance to “Levitating” by Dua Lipa, since that stage gives off girl-boss energy and uses a bold color palette of hot pink and purple.

After choosing an avatar and virtual environment, the dancer is shown how to pick up virtual particles to enhance their performance. Our main dancer wanted to use all three particle types (fire, blue fire, and electricity) for a power-packed performance, and so that she could experiment to see which looked best with her movements. The particles fade out so that the performance stays clean. Dancers who want to evoke more intense emotions from the audience can pick up multiple particles at once for a more powerful effect.

The entire performance can be recorded, and afterward the dancer can share the recording with friends and family or on social media. Our tool is useful for dancers who want to improve their skills in styles such as hip hop or ballet, for artists who want to create wild, abstract art with special-effect particles, and for anyone who just wants to put on the motion capture suit and experience something new.

Our main dancer said she would love to post her Live Action recording on TikTok as a split screen, with the video of her dancing in the suit next to her virtual performance so that TikTok viewers can watch both at once. Our main goal is to provide a tool that is open to anyone who wants to express themselves in a new reality and share their art with the world.
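One simple way to get the particle fade-out and stacking described above is to scale each particle's opacity down linearly over its lifetime and to let a dancer hold several particle types at once, each spawning its own particles. The Python sketch below models only that logic under stated assumptions: the class and function names, the linear fade curve, and the one-second lifetime are hypothetical, not values from the project, whose effects live in Unreal Engine.

```python
from dataclasses import dataclass

# The three particle types named in the write-up.
PARTICLE_TYPES = ("fire", "blue fire", "electricity")


def fade_opacity(age: float, lifetime: float) -> float:
    """Linear fade: 1.0 when a particle spawns, 0.0 once it reaches its lifetime."""
    if lifetime <= 0:
        raise ValueError("lifetime must be positive")
    remaining = 1.0 - age / lifetime
    # Clamp so particles past their lifetime stay fully transparent.
    return max(0.0, min(1.0, remaining))


@dataclass
class Particle:
    kind: str
    age: float = 0.0
    lifetime: float = 1.0  # seconds; hypothetical default

    def tick(self, dt: float) -> None:
        self.age += dt

    @property
    def opacity(self) -> float:
        return fade_opacity(self.age, self.lifetime)

    @property
    def alive(self) -> bool:
        return self.age < self.lifetime


class Performer:
    """Hypothetical dancer who can hold several particle types at once."""

    def __init__(self) -> None:
        self.held: set[str] = set()
        self.particles: list[Particle] = []

    def pick_up(self, kind: str) -> None:
        if kind not in PARTICLE_TYPES:
            raise ValueError(f"unknown particle type: {kind}")
        self.held.add(kind)

    def emit(self) -> None:
        # Every held type spawns a particle, so holding more types stacks effects.
        for kind in sorted(self.held):
            self.particles.append(Particle(kind))

    def tick(self, dt: float) -> None:
        for p in self.particles:
            p.tick(dt)
        # Drop fully faded particles so the scene stays clean.
        self.particles = [p for p in self.particles if p.alive]
```

Removing particles once they are fully transparent, rather than keeping invisible ones around, is what keeps the stacked effects from piling up over a long performance.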

Team: Amirtha Krishnan, Aditi Yelne, Sonia Chaudhary, Shwetha Radhakrishnan

Technical Implementation:

The project uses OptiTrack, a state-of-the-art motion capture system used for virtual production, movement science, virtual reality, robotics, and animation. OptiTrack captures the dancer’s movements in real time and streams them live into the Unreal Engine project, where they are applied to the dancer’s chosen character in the chosen environment.
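Conceptually, each streamed frame is a set of named skeleton joints with rotations, and casting it onto the chosen character means mapping each source joint name onto the character's bone name before applying the rotation. The Python sketch below illustrates only that name-mapping step, under stated assumptions: the frame format, joint names, and `BONE_MAP` are hypothetical, and in practice OptiTrack's software and Unreal Engine's tooling handle streaming and retargeting, whose actual APIs are not shown here. Copying rotations directly is also a simplification that only works when both skeletons share a rest pose; real retargeting compensates for differing proportions and rest poses.

```python
from dataclasses import dataclass

# Quaternion as (w, x, y, z); hypothetical frame representation.
Quat = tuple[float, float, float, float]


@dataclass(frozen=True)
class MocapFrame:
    """One streamed frame: joint name -> local rotation (hypothetical format)."""

    timestamp: float
    joints: dict[str, Quat]


# Hypothetical mapping from streamed joint names to the avatar's bone names.
BONE_MAP = {
    "Hips": "pelvis",
    "Spine": "spine_01",
    "Head": "head",
    "LeftArm": "upperarm_l",
    "RightArm": "upperarm_r",
}


def retarget(frame: MocapFrame, bone_map: dict[str, str]) -> dict[str, Quat]:
    """Rename streamed joints to avatar bones, skipping joints with no mapping."""
    pose: dict[str, Quat] = {}
    for joint, rotation in frame.joints.items():
        bone = bone_map.get(joint)
        if bone is not None:
            pose[bone] = rotation
    return pose


def apply_stream(frames: list[MocapFrame], bone_map: dict[str, str]) -> dict[str, Quat]:
    """Apply frames in timestamp order; later frames overwrite earlier rotations."""
    pose: dict[str, Quat] = {}
    for frame in sorted(frames, key=lambda f: f.timestamp):
        pose.update(retarget(frame, bone_map))
    return pose
```

Skipping unmapped joints instead of raising an error lets a frame that carries extra joints still drive every bone the avatar does have.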


References:

Motion capture for FBX import to Unreal Engine:

https://www.youtube.com/watch?v=RSE7d4Wrozg&t=158s&ab_channel=RazedBlaze

Animations and particles:

https://www.youtube.com/watch?v=YXOhPXhhVRU

Character Selection:

https://www.youtube.com/watch?v=sHq69iYfd9E&list=RDCMUCsS5i15vvUbwfr_1JdRKCAA&index=2

Picking up and swapping items:

https://www.youtube.com/watch?v=b6XBzGWoe0I

Audio:

https://www.youtube.com/watch?v=ii0r6AzXUFM

Render a Video: